Downloads

Download

This work is licensed under a Creative Commons Attribution 4.0 International License.

Survey/review study

A Review of Techniques on Gait-Based Person Re-Identification

Babak Rahi 1,*, Maozhen Li 1, and Man Qi 2

1 Department of Electronics and Computer Engineering, Brunel University London, Uxbridge, Middlesex, UB8 3PH, United Kingdom

2 The School of Engineering, University of Warwick, Coventry CV4 7AL, United Kingdom

* Correspondence: babak.h.rahi@hotmail.com

Received: 16 October 2022

Accepted: 14 December 2022

Published: 27 March 2023

Abstract: Person re-identification at a distance across multiple non-overlapping cameras has been an active research area for years. In the past ten years, short-term Person re-identification techniques have made great strides in accuracy using only appearance features in limited environments. However, massive intra-class variations and inter-class confusion limit their ability to be used in practical applications. Moreover, appearance consistency can only be assumed in a short time span from one camera to the other. Since the holistic appearance will change drastically over days and weeks, the technique, as mentioned above, will be ineffective. Practical applications usually require a long-term solution in which the subject's appearance and clothing might have changed after the elapse of a significant period. Facing these problems, soft biometric features such as Gait has stirred much interest in the past years. Nevertheless, even Gait can vary with illness, ageing and emotional states, walking surfaces, shoe types, clothes types, carried objects (by the subject) and even environment clutters. Therefore, Gait is considered as a temporal cue that could provide biometric motion information. On the other hand, the shape of the human body could be viewed as a spatial signal which can produce valuable information. So extracting discriminative features from both spatial and temporal domains would benefit this research. This article examines the main approaches used in gait analysis for re-identification over the past decade. We identify several relevant dimensions of the problem and provide a taxonomic analysis of current research. We conclude by reviewing the performance levels achievable with current technology and providing a perspective on the most challenging and promising research directions.

Keywords:

gait feature extraction convolutional neural networks gait re-identification gait-recognition neural networks1. Introduction

The concern over the safety and security of people is continuously growing in recent years. It is no secret that governments are severely concerned with the security of public places such as metro stations, shopping malls, and airports. Protecting the public is an expensive and taxing endeavour. Consequently, governments seek the help of private companies and scientists to alleviate this pressure and provide better security solutions. With the rise of the COVID-19 pandemic, the need for better security solutions is even more evident. Video surveillance systems and CCTV cameras are crucial in optimising such efforts.

The abundance of security cameras and surveillance systems in public areas is valuable for tackling various security issues, including crime prevention. All the recordings from these video surveillance systems must be analysed by surveillance operators, which can be daunting. These operators need to analyse these surveillance videos in real-time and identify various categories of anomalies while looking for a "person of interest" who could easily change their appearance and be unidentifiable to a human with naked eyes. Intelligent video surveillance systems (IVSS) automate the process of analysing and monitoring hours of acquired videos and help the operators understand and handle them. Person re-identification (Re-ID) is one of the most challenging problems in IVSS, which uses computer vision and machine learning techniques to achieve automation.

In the world of computer vision and surveillance systems, person re-identification refers to recognising a person of interest at different locations using multiple non-overlapping cameras. In other words, identify an individual over a massive network of video surveillance systems with non-overlapping fields of view [1,2]. Person re-identification can also be defined as matching individuals with samples in publicly available datasets, which may have various positions, view angles, lighting or background conditions. This process can be performed on an image sequence or video frames that have been prerecorded or in real-time.

Person Re-ID problems arise when the subject moves from one camera view to another in a network of cameras since moving to another camera view could change their position as well as lighting and background conditions. Even the distance and the angle of the camera view, along with numerous other factors, such as unidentified objects and areas from one camera view to another, can affect the outcome of Re-ID.

Furthermore, person Re-ID is used in security and forensics applications to help the authorities and government agencies find a person of interest. There are three general steps to every person's re-identification solution. Segmentation determines which parts of the frames need to be segmented and focused on. The signature generation finds invariant signatures to compare these parts, and finally, comparison finds an appropriate method to compare these signatures.

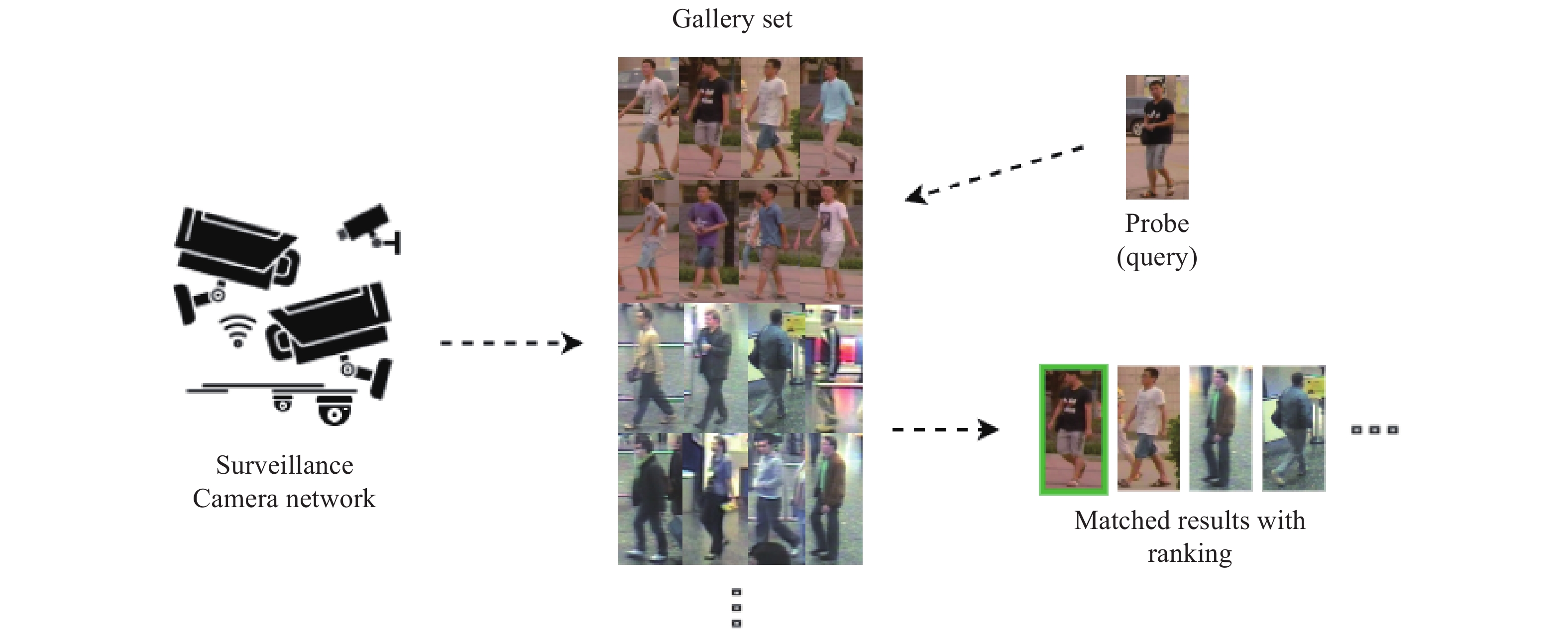

There are multiple methods for person Re-ID, but an image or sequence of the subjects is usually given as a Query or Probe. The individuals recorded by the camera network as a template gallery are given as frames. Descriptors are generated for both the template gallery and the probe and consequently compared, where the system gives a ranked list or percentage of similarity based on the probability and similarity of images or sequences to the Probe.

Figure 1 shows a very primitive person Re-ID pipeline in which the system tries to find the corresponding images for a given probe in a gallery of templates. Creating the gallery relies directly on how the re-identification solution has been set up, and [3] categorises this as single-shot and multiple shots, which indicates one or more than one template per frame, respectively. In the case of multiple shots, the new image will be used in the continuous person Re-ID solution, and each time the new image will be used as a probe for the next level.

Figure 1. A Basic person Re-ID framework.

Deep learning methods, in particular, convolutional neural networks (CNNs), are used in person Re-ID solutions to learn from a large number of data [4-6]. A CNN uses many filters to look at an image through a smaller window and create feature maps at each layer. Features maps are essentially the reported results of findings after the image has been run through the filter. A particular combination of low-level features can be an indication of more complex features, and feature maps can gradually capture higher-level features at each layer of the network [7].

There are various ways of training a deep neural network (DNN) model. Depending on the problem and the availability of adequate labelled data, these approaches can be categorised as supervised learning, semi-supervised learning and unsupervised learning. In the problem of person Re-ID, mainly for security and anti-terrorism applications, only a small amount of training data is available, so semi-supervised or unsupervised models might result in inaccurate solutions. Another categorisation of DNN person Re-ID approaches is based on their learning methodologies.

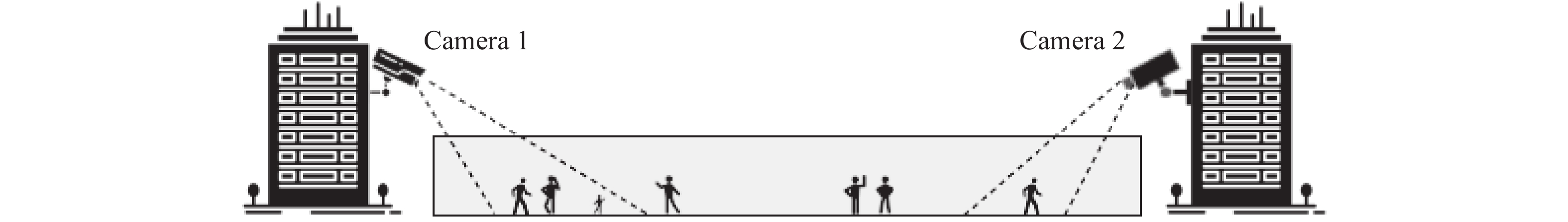

These methods can be also be categorised into short-term and long-term person re-identification according to the time interval. Figure 2 shows an example of a short-term person Re-ID in which camera-1 and camera-2 monitor the same walking path with non-overlapping surveillance views.

Figure 2. An example of short-term person Re-ID.

When the person of interest walks from camera view one to camera view two, short-term person Re-ID can bridge the gap between these two surveillance views. Given a video sequence (Probe) captured by camera one, person Re-ID tries to identify the same person while crossing the view of camera two. It then publishes a ranked list of images with descending probability of being the person of interest, similar to the primitive person Re-ID framework in Figure 1. Accomplishing this task requires four independent steps: human detection, human tracking, feature extraction, and classification. Together these four steps create a complete person Re-ID pipeline illustrated in Figure 3.

Figure 3. Pipeline of person Re-ID system.

The first two steps of this timeline are independent fields of research which are achieved by numerous methods; however, they are utilised in research to validate the results [8-16]. Feature extraction refers to learning specific properties that make training samples unique, and Classification is the process of matching the feature variables between the training data and the Probe.

Most methods focus on the short-term person Re-ID and use handcrafted or metric features and a single deep neural network solution. In this case, since the area between the two camera views is small, the appearance features will most likely stay the same, and the task of person Re-ID can be achieved by only relying on the appearance or metric features.

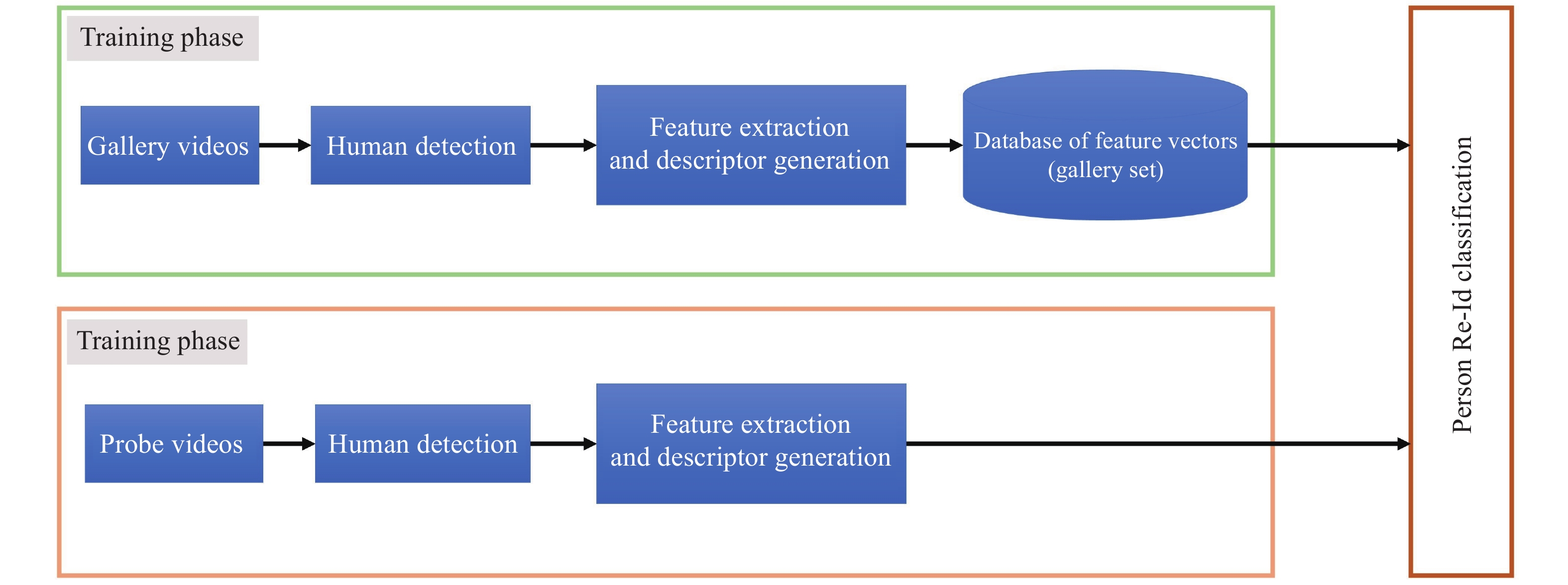

Figure 4 shows a typical person Re-ID system with an incorporated human detection and feature extraction step. This system contains a training phase in which a gallery set of feature vectors is generated. This gallery set includes features of all the individuals walking the path. In the testing phase, one descriptor for the person of interest is produced using the same method in the training phase. When the probe person enters the camera network, his feature vector is generated the same way as the training phase, and then compared against the gallery using a classification algorithm or similarity-matching technique. The system will then output the ID of the best-matched re-identified person.

Figure 4. Classic person Re-ID Systems.

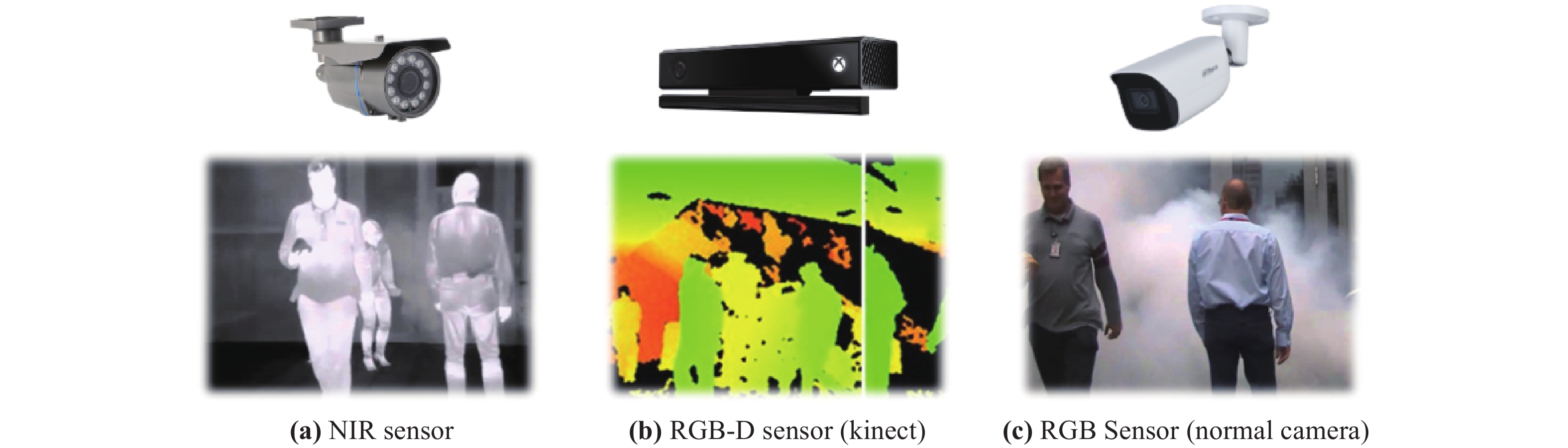

Another consideration is the type of sensors used for person Re-ID. Figure 5 shows the most popular sensors used in person Re-ID research. Near-infrared (NIR) cameras are primarily used in situations with low lighting, such as dark indoor conditions or at night [17]. RGB-D sensors or Kinects can acquire depth information which can be helpful for 3D reconstruction or depth perception but is used in very particular person Re-ID applications [18,19]. In practice, most surveillance systems work with the standard RGB cameras, so naturally, researchers focused on reducing the inter-class and intra-class variations on this type of sensors [20,21]. In short term Re-ID, RGB-D cameras are proven to have less performance and more cost than the standard RGB cameras, so they are not practical for widespread use in the real world.

Figure 5. Most popular sensors for person Re-ID.

Person Re-ID systems could be also characterised based on the type of inputs into image-based and video-based categories. In image-based, the system receives random frames as inputs and focuses on appearance features such as colour and texture since these features remain the same in short periods of time [22-27]. Video-based systems receive a video clip or a collection of successive frames as input. However, in addition to the appearance attributes, video-based systems also explore movement data to improve the performance of the system [28-35].

Short-term person Re-ID methods have achieved great accuracy on publicly available datasets. However, due to several inter-class and intra-class variations, they suffer from low performance and high cost. Subsequently, researchers try to reduce these variations and their effects. In the early years, the main focus was on handcrafting descriptors from images or videos. This is including but not limited to histograms of different colour spaces such as HSV, YCbCr, LAB and LBP which are extracted from overlapping features and then concatenated into single feature vectors, local patterns in binary forms [36-39], a collection of local features [40,41], maximal occurrence representation of local features [25], HOG3D [34], STFV3D [33], features learnt by convolutional neural networks [22,42,43], or a combination of the features above. Metric learning classification methods such as KISSME, local fisher and marginal fisher analysis, top push distance learning, nearest neighbour, NFST and quadratic discriminant analysis are also used to discriminate between the mentioned features [24,25,34,38,44-47].

According to a projection published by IHS markit, there are over one billion surveillance cameras installed around the world as of 2021 [48]. After China, the UK has one of the most substantial numbers of CCTV cameras globally. In 2015 the british security industry association (BSIA) estimated that between four to six million security cameras are installed in the country. London has the highest number of CCTV cameras in the UK. By that estimation, an average Londoner could get caught on camera three hundred times a day [49]. These cameras are used in places from home and shop surveillance to public areas such as airports, shopping centres, metro stations, and other forms of public transportation.

The main reasons behind this radical increase in the use of CCTV are the reduction in the price of cameras and the effectiveness of crime prevention. Utilising CCTV cameras in real-time, the police and security agencies can prevent incidents by detecting suspicious behaviour or gathering evidence such as identifying suspects, witnesses, and vehicles after a crime has been committed. Accordingly, the task of threat detection and person re-identification is left entirely to human operators. These security operators need to possess a high level of visual attention and vigilance to react to rarely occurring incidents. Moreover, many human resources are required to analyse the millions of hours of collected videos, thereby making this task very costly. It is even more taxing and time-consuming to search for a person of interest in thousands of hours of prerecorded videos which requires expert forensics specialists.

Automatic video analysis considerably reduces these costs, and for this reason, it became a critical field of research. This research field tackles problems including but not limited to object detection and recognition, object tracking, human detection, person re-identification, behaviour analysis and violence detection. Solutions to these problems have applications in numerous domains like robotics, entertainment, and to no small extent, video surveillance and security.

Person Re-ID is different from the classic detection and identification tasks since person detection is to distinguish a human from the background, and identification is the process of determining a person's identity in a picture or video. Detection indicates whether there is a person in the provided image, and Identification tells us who it is. However, person Re-ID determines whether an image in a video clip belongs to the same individual who previously appeared in front of the camera.

Usually, the assumption is that the subject wears the same clothing in different camera views, and the appearance stays the same for the re-identification task. This premise produces a significant limitation on the job since people can change their appearance and especially their clothing over the course of days, hours or even minutes. These alterations make re-identification based on appearance unlikely after a certain period of time. The hypothesis is that biometric features like faces or Iris are not always available in CCTV videos, especially after the rise of the COVID-19 pandemic.

Illumination changes, position variations, viewpoints and inter-object occlusions make appearance-based person Re-ID a notable problem. Most recent models use different features like the colour of clothes and texture to improve their performance. Typically they generate feature histograms, concatenate and finally weigh them according to their importance and distinguishing power [50,51]. These features can be learnt through multiple methods such as boosting, measuring distance metrics and rank learning [52,53]. The downside of these methods is their lack of scalability since the learning process needs constant supervision as the subjects change. It is a better practice not to bias all the weights to global features and give selective weights to more individually unique features such as salient appearance features or gate features such as walking speed and direction and the flow of movement. Human visual attention is studied in [20] and the results imply that attentional competition between features could take place not only based on the global features but also the salient features in individual objects.

To overcome the issues mentioned above, soft biometrics, such as Gait, has been used in the past. Gait is a biometric feature that focuses on a person's walking characteristics and motion features. Other biometrics, such as Iris and face could be altered using a pair of contact lenses or a simple surgical mask after the COVID-19 pandemic. Gait's advantages in video surveillance include the ability to extract features non-invasively from a distance, property acquisition from low resolution, and the ability to extract features even in the dark using different modality cameras. The two key metrics in Gait analysis are spatial and temporal parameters. Spatial and temporal features can describe the state of an object over time or a position in space. Moreover, in the past few years, several publicly available datasets have been published, which can be used to validate our research. In short, working on Gait as a biometric feature for person recognition and re-identification could be a massive help in the war against crime and even prevent terrorist attacks by recognising well-known terrorists and dangerous repeat criminals.

This paper introduces the methods used in Gait person Re-ID and reviews the literature surrounding the subject by dividing the person Re-ID algorithms into three different paradigms.

2. Applications and Challenges

2.1. Applications

Person Re-ID methods have much potential in a wide range of practical applications, from security to health care and even retail. For instance, cross-camera person tracking could understand a scene through computer vision, track people across multiple camera views, perform analysis on the crowd movement and do activity recognition. When the person of interest moves from one camera view to the other, the track will be broken. Therefore, person Re-ID is used to establish connections between the tracks to accomplish cross-camera tracking.

Another form of person Re-ID is tracking by detection, which uses person detection methods to perform tracking of a subject. This task includes modelling diverse data in videos, detecting people in the frames, predicting their motion patterns and performing data association between multiple frames. When person Re-ID is associated with the recognition task, a specific query image will be introduced and searched in an extensive database to perform person retrieval. This method usually produces a list of similar images or frame sequences in the database.

Person Re-ID is also used to observe long-term human behaviour and activity analysis, for example, maximising sales by observing customers' shopping trends, analysing their activities and altering products and even shopping floor layouts. In health care, it can be used to analyse patient behaviour and habits to assist hospital staff with a higher standard of care.

Other applications of the Gait person Re-ID have become more evident in recent years. For example, a considerable portion of human faces were hidden behind masks during the COVID-19 pandemic. Moreover, social or assistive robots carrying out daily collaborative tasks must know whom to reach. Person Re-ID using a biometric pattern such as Gait could replace identification cards or codes for critical infrastructure access control. Brittany which is a biometric recognition tool based on gait analysis and convolutional neural networks (CNNs) is presented in [54]. This could be expanded to autonomous vehicles where 3D motion data could be collected using LIDARs and cameras. Another use of Gait person Re-ID has been presented in [55], which proposes a smartphone-based gait recognition system that can recognize the subject in a real-world environment free of constraints.

2.2. Challenges

2.2.1. Appearance Inconsistency and Clothing Variations

Several challenges must be overcome to solve Person Re-ID's problem and use it in the above applications. Matching a person across different scenes requires dealing with class variations and confusion. The same person can undergo significant changes in appearance from one scene to another, or two different individuals can have similar appearance features across multiple camera views. These variations include Illumination variation, camera viewpoint variation, pose variation, low resolution, similar clothing, partial occlusion, real-time constraint, clothing change, accessories change, camera settings, a small training set and data labelling cost. Moreover, most models support short-term Re-ID in which they leverage colour and texture and the object carried by the subject. One of the most common challenges of appearance-based methods is the assumption that colours could be assigned to the same object under various lighting conditions, whereas achieving colour consistency under such conditions is not an easy task [56].

Another limitation is appearance consistency, which can only be assumed in a short time span from one camera to another. Since the holistic appearance will change drastically over days and weeks, the technique, as mentioned above, will be ineffective. Practical applications usually require a long-term solution in which the subject's appearance and clothing might have changed after the elapse of a significant period [57]. It is irrefutable that appearance-based short term person Re-ID techniques have made great strides in terms of accuracy in the past years. Still, problems such as massive intraclass variations and inter-class confusion caused by the conditions mentioned above make this a challenging and worthwhile field of research. Some of these challenges can be seen in well-known datasets such as PRID2011 [58], MARS [32], iLIDS-VID [59], DukeMTMC-reID [60].

2.2.2. Insufficient Datasets

Insufficient datasets are another challenge. Several publicly available datasets are out there, but none is large enough regarding the number of camera angles, number of subjects, or recorded period of time. Most datasets are usually recorded using two cameras and a small sample of subjects, and since deep learning models need an extensive training set and validation set, building realistic datasets would help further the progress of person Re-ID research.

2.3. Tackling the Challenges Using Generated Data

When training a model on one dataset and testing on another, more often than not, the performance drops significantly. To overcome this challenge, data augmentation techniques such as generative adversarial network (GAN) [61] have been designed in recent years to introduce large-scale datasets [62,63] or to expand sample data [60,64]. Another important research direction is the unsupervised person Re-ID models. Unlike supervised learning, these models do not train using labelled data in the same environment and have a lower annotation cost [65-67].

Since these features are not robust enough, some authors try to include other modalities, such as depth and thermal data, in their models using deep learning. These models are known as multi-modal methods and are particularly challenging in real life because a framework has to be developed that can handle multiple variations such as subject position and view obstructions [68,69]. Building architectures of deep learning models for person Re-ID is a very time-consuming task, so some researchers are using neural architecture search methods (NAS) to automate the process of architecture engineering [70,71]. The most crucial challenge in NAS methods is that there is no guarantee of how appropriate the CNN would be after NAS has chosen it.

2.4. Tackling Challenges Using Biometric Features

Facing all these problems, long-term approaches using Biometric features have been suggested by researchers in the past. Biometrics is the science of person identification based on physical and behavioural traits like body measurements and calculations related to human characteristics, which can be used to describe and label them [72]. Biometric surveillance identifies a person of interest by extracting features of all the people in the camera network and comparing them to the gallery set. The most commonly used biometrics are categorised as Hard Biometrics and Soft Biometrics. Some Hard Biometrics are fingerprint, iris, face, voice and palm print which do not change over time and are primarily used in access control systems. The acquisition of these biometrics demands a controlled environment and invasive measures. In video surveillance scenarios, people move more freely and without supervision, making it impractical to gather hard biometrics. To tackle the problem of long-term Re-ID, Soft Biometrics [73] like anthropometric measurements, body size, height or Gait are used with better success. These biometrics are more reliable for long-term Re-ID solutions, but also more challenging. Compared to hard biometrics, soft biometrics lack strong indicators of an individual's identity. Nevertheless, the non-invasive nature of Soft Biometrics and the ability to acquire information from a distance without the need for subject cooperation makes them a strong candidate for tackling person Re-ID.

2.4.1. Gait as a Soft Biometric Feature

Among Soft Biometrics, Gait is the most widespread feature for person Re-ID in surveillance networks. Other soft biometrics such as 3D face recognition are also considered, but Gait is more popular because first, it does not require contact with the subject, and the cues gathered from Gait are unique to each individual that are extremely hard to fake [74]. Gait can also work in low-resolution videos. Gait Re-ID gathers and labels distinctive measurable features of human movement just like any other biometric system. In psychology and neuroscience, focusing on Gait is an essential subject in humans' perception of others. For example, it is a known fact that in cases of prosopagnosia or face blindness, "the patients tend to develop individual coping mechanisms to allow them to identify the people around them, mostly via non-facial cues such as voice, clothing, gait and hairstyle recognition" [75-77]. Moreover, Gait analysis is a valuable tool for the diagnosis of several neurological disorders such as stroke, cerebral palsy, Parkinson's, and sclerosis [78-81].

2.5. Person Re-ID Using Gait

Person Re-ID based on Gait has received substantial attention in the past ten years, especially from the biometric and computer vision community, due to the advantages mentioned above. Gait as a soft biometric can vary with illness, ageing, changes in the emotional state, walking surfaces, shoe type, clothes type, objects carried by the subject, and even clutter in the scene. Moreover, Gait based person Re-ID problem should not be mistaken with Gait based person recognition since they are applied in entirely different scenarios. Recognition is employed in heavily controlled environments, often with a single camera, and the operator can influence conditions including but not limited to background, subject pose, angle of the camera and occlusion. On the other hand, the conditions in person Re-ID are entirely out of control. Since a large camera network is used to solve this type of problem, variables like lighting, number of people and occlusion, and the angle and direction of the walk are unknown. Gait lets us analyse a person of interest from various standpoints and poses. What makes Gait more attractive is the tendency to be used in long-term person Re-ID, relying on more than only spatial signals and appearance features. Gait is considered a type of temporal cue that could provide biometric motion information. Combinations of appearance and soft Biometrics have been used in the past to solve the problem of person Re-ID [24]. On the other hand, the shape of the human body could be considered a spatial signal which can produce valuable information. Several works have tried to extract discriminative features from both spatial and temporal domains [33,82,83].

2.5.1. Model Based vs Model-Free Gait Recognition

Early research on person gait recognition and Re-ID attempted to model the human body because Gait is essentially analysing the motion of distinct parts of the human body [84-86]. Specific characteristics pertaining to various body parts are extracted from each image in the sequence to form a human body model. Then, the parameters will be updated for each silhouette in the sequence and used to create a gait signature. These characteristics are usually metric parameters such as the length and width of a body part like arms, legs, torso, shoulders, heads along with their positions in the images, and all of them can be used to define the walking trajectory or the Euclidean distance between the limbs. Twenty-two such features are introduced in [87,88] where a posture-based method for gait recognition which learned posture characteristics by considering the displacement of all joints between two consecutive frames and a fixed centre of the body coordinate system for all the joints is proposed. A two-point gait representation was introduced in [86], which modelled the motion of limbs regardless of the body shape.

Other works, such as [89], create a motion model by considering the motion of hips and knees in various gait cycle phases. In this approach, the features are extracted from 2D images. Gait parameters, namely the magnitude and phase of the Fourier components, are extracted using a Viewpoint rectification stage. However, because of the low quality of surveillance images captured in the real world, it is implausible to calculate a model robust enough for widespread practical usage.

In conclusion, although large models achieve the best accuracy, they may consume a lot of memory and time when applied to existing video surveillance systems, therefore directly impacting the efficiency. To solve this problem, the trade-off between accuracy and efficiency must be considered in person Re-ID models.

3. Taxonomy of Gait Re-ID Methods

The Re-Identification of humans in digital surveillance systems is discussed in this section, and the current methods employed to tackle this problem are listed. Critically assessing these methods will help exploit the available labelled data from the publicly available datasets more efficiently and improve the training stage's efficiency in irregular Gait person Re-ID. It also shows a significant research gap in areas relating to irregular gait recognition and Re-Identification, which we face in practical scenarios rather than closed lab environments.

It can be assumed that Aristotle in De Motu Animalium (On the Gait of animals) was the modern gait analysis pioneer. A series of papers were published on the biomechanics of Gait for humans under unloaded and loaded conditions by Christian Wilhelm in the 1980s [90].

It is the cinematography's and photography's progression that make the capture of frame sequences (that reveal details of animal and human movements unnoticed by the naked eye) became possible with pioneering work in [91] and [92]. For example, the horse gallop sequence was normally distorted in painting before the discovery was exposed by aerial photography.

The production of video camera systems in the 1970s helped begin the extensive research and practical application of gait analysis on people with pathological diseases such as Parkinson's and cerebral palsy within a realistic time frame and with low cost. Based on the results obtained by gait analysis, orthopaedic surgery made significant advances in the 1980s. Many orthopaedic hospitals worldwide are currently equipped with gait labs to suggest and develop regimens and plans for doctors' treatment and scheduled follow-ups.

Human identification from a distance or non-intrusive human recognition has gained much interest in the computer vision research community. The Gait recognition study area addresses the identification of a person by the way they walk. Gait recognition proposes quite a few exclusive characteristics compared to other biometric methods. One characteristic that is most attractive to researchers is the unobtrusive nature of science. The subjects do not need to cooperate or pay attention to be identified. Also, no physical information is needed to capture human gait characteristics from a distance.

In this research area, complications may arise when multiple cameras are used to monitor an environment. For example, a person's position is known to us when they are within a single camera view, but problems might accrue as soon as the subject moves out of one view and enters another. This is the essence of the human re-ientification problem. How can the system detect the same person that appeared in another camera view earlier? The purpose of this section is to evaluate the gait-based methods of re-identification.

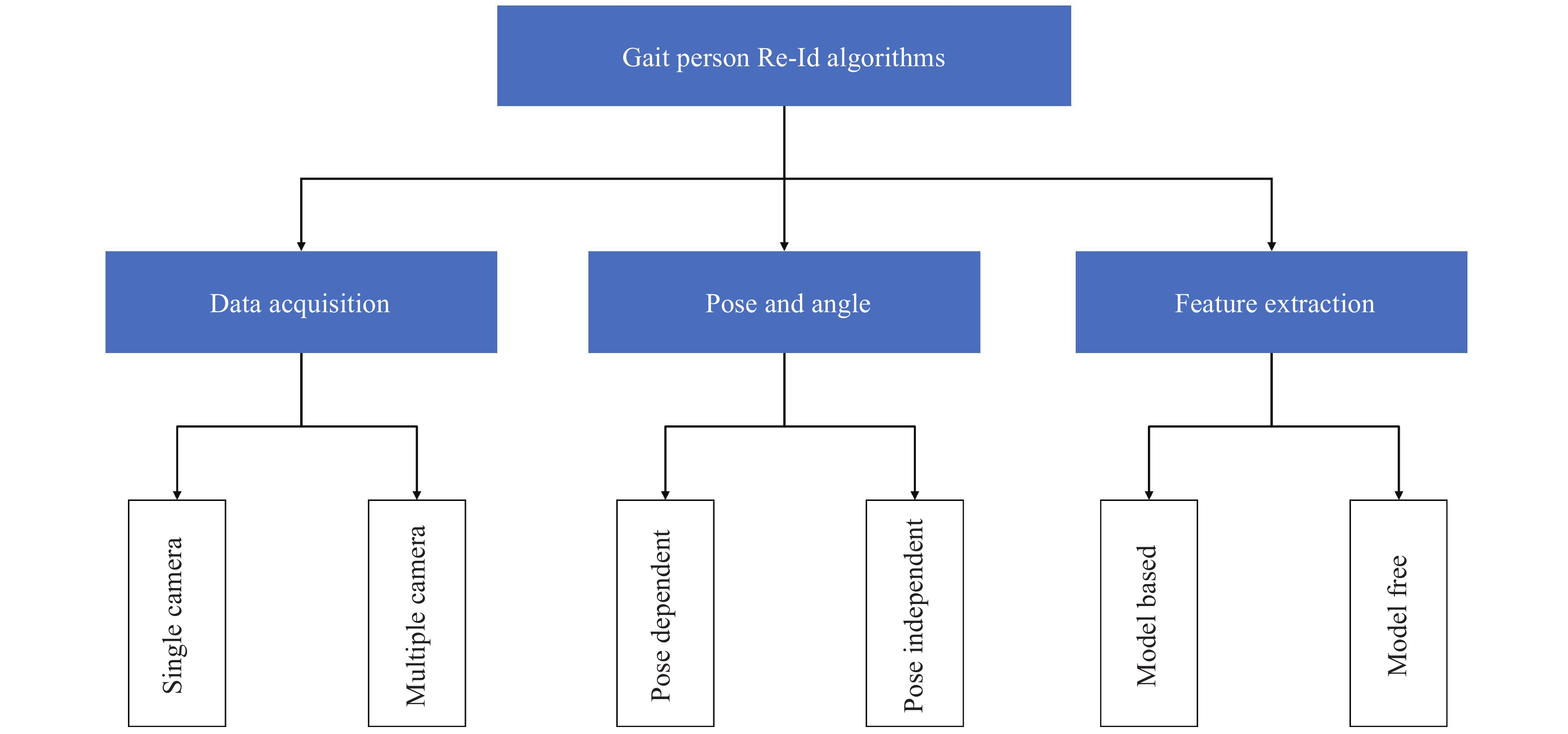

We classify the past algorithms into three paradigms to best grasp the state-of-the-art methods used in Gait person Re-ID. Figure 6 shows the overall classification of these algorithms. Finally, we will discuss these approaches and their strengths and drawbacks to better understand Gait Re-ID's challenges.

Figure 6. An overall classification of Gait person Re-ID methods.

3.1. Approaches to Data Acquisition

The number and types of cameras used in the raw data acquisition directly impact the person Re-ID algorithm. We can categorise these approaches using a single camera-acquired dataset and those using datasets gathered using multiple cameras.

3.1.1. Single Camera Approaches

Depending on the type of camera used, the algorithm could exploit two-dimensional (2D) or three-dimensional (3D) information. Motion capture (MOCAP) systems or depth sensors such as Kinect can directly acquire a 3D representation of the environment [93-96]. However, datasets generated from 2D cameras are the standard in most previous works [2,89]. A Re-ID scenario usually consists of multiple variations, including camera viewpoints, clothing, lighting and walking speed. When there are multiple cameras in the network, some could be used for the gallery and some for the probe set. Other variations could be used similarly to compare their effect on an algorithm. In most cases, the data used for gait recognition and Re-ID is a short video clip or a sequence of consecutive frames containing gait cycles. Some of the most popular publicly available Gait datasets are listed in Table 1.

Table 1 Details of some of the well-known publicly available datasets for gait recognition

| Name | Subjects | Location | Image Dimension | Views | Variations |

| TUM-GAID | 305 | Inside | 640 × 480 | 1 | Normal Back Pack Shoes |

| CASIA Dataset A | 20 | Outside | 352 × 240 | 3 | Clothing |

| CASIA Dataset B | 124 | Inside | 320 × 240 | 11 | Normal Back Pack Coat Lighting |

| SOTON (Large) | 115 | Inside/Outside | 20 × 20 | 2 | Clothing |

| CMU MoBo | 25 | Inside (Treadmill) | 640 × 480 | 6 | Slow Walk Incline Walk Ball Walk |

| OU-MVLP | 10,307 | Inside | 1280 × 980 | 7 | - |

| OU-ISIR, Treadmill A | 34 | Inside (Treadmill) | 88 × 128 | 1 | Speed |

| OU-ISIR, Treadmill B | 68 | Inside (Treadmill) | 88 × 128 | 1 | Clothing |

| OU-ISIR, Treadmill D | 185 | Inside (Treadmill) | 88 × 128 | 1 | Occlusion |

| i-LIDS (MCT) | 119 | Inside | 576 × 704 | 5 | Clothing Occlusion |

| iLIDS-VID | 300 | Outside | 576 × 704 | 2 | Lighting Occlusion |

| PRID2011 | 245 | Outside | 64 × 128 | 2 | Lighting Occlusion |

| KinectREID | 71 | Inside | Vary | 1 | - |

| MARS | 1261 | Outside | 1080 × 1920 | 6 | Lighting |

| HDA | 85 | Inside | 2560 × 1600 | 13 | - |

| USF | 25 | Inside | 640 × 480 | 6 | 32 Variations |

| Vislab KS20 | 20 | Inside | Only depth data | 1 | - |

Previous works usually use different combinations of camera views to simulate a real-world scenario even though datasets such as CASIA-B and SAIVT have multiple overlapping and non-overlapping camera views [97]. Only one camera is used for the Probe when running the system, and the rest are used to create the gallery. This approach was used in [98,99] on PKU, SOTON and CASIA, which contain multiple camera viewpoints. The i-LIDS dataset with two different camera views is used in [89]. They used one camera for the Probe and the other for the gallery set. A random fifty-fifty split for each sequence in the dataset regardless of the camera view and tested this approach on iLIDS-VID, HDA+ and PRID2011 datasets is used in [29].

3.1.2. Multiple Camera Approaches

Some previous works used overlapping camera views, but due to constraints in calibration and installation of multiple cameras simultaneously, such works are scarce in the literature. One example of such works is [100], in which they used 16 overlapping cameras to create a 3D gait model of a human. The 3D gait models were generated by the volume intersection of silhouettes extracted from walking frame sequences. Then, random viewpoints from these synthetic images were chosen to create the gallery. Using kinect and MOCAP system will considerably reduce the amount of computation and complexity of the problem since they can acquire the 3D data directly and use the same camera for both Probe and gallery sets [95-101,103]. In these works, people walk in arbitrary directions or side to side in front of the same Kinect 3D sensor. MOCAP systems for person Re-ID is used in [93, 94]. In [93], they used the dataset of Carnegie Mellon University. This dataset was collected using 12 vicon infrared MX-40 cameras with people wearing marked black clothes. The markers were only visible in infrared and were used to produce the 3D information.

Although in the past few years, there was a paradigm shift towards methods with overlapping viewpoints with kinect or MOCAP systems. Nevertheless, there is still an overwhelming amount of research on 2D surveillance networks since the use of such sophisticated devices (which can acquire direct 3D motion information) is not yet incorporated in the real world.

3.2. Approaches to Pose and Angle

In automatic surveillance systems, the pose of a person or human pose is determined by the direction of the walk and the camera viewpoint. Depending on the pose, the dynamic and static information acquired from the image sequence can change when switching between camera views or when a period of time has passed. Conditional on the human pose, pose-dependent and pose-independent approaches are reported in the literature. In pose-dependent, the data applied to the person Re-ID system is restricted to only one direction or camera view, but in pose-independent, any arbitrary viewpoint or direction could be used as the input of the system.

3.2.1. Pose-Dependent Approaches

Pose-dependant approaches are most useful for indoor scenarios where walking directions will not change in relation to the camera, such as station entrances and shopping centre corridors. Numerous publicly available datasets, including iLIDS-VID, CASIA, PRID2011 and TUM-GAID, support the pose-dependant approach. Single camera viewpoint approach has been used in [102−104]. In [102] both side and top views are used, and in [103,104] they use frontal view, but most past research is focused on the lateral (side) human view because it enables a more clear observation of the human Gait and provides a consistent amount of self-occlusion in each frame [83,105]. Some works choose the system inputs based on the viewpoints provided by the dataset [98,99] for example, lateral for CASIA and TUM-GAID and frontal and back for PKU.

3.2.2. Pose-Independent Approaches

Pose-independent or cross-view are among the most practical methods for real-world applications because the human walk's direction is most likely arbitrary in an uncontrolled data acquisition setup. The viewpoint variation puts a higher computational strain on the person Re-ID system. On the other hand, the input data must be of a higher quality than approaches dependent on only one view. The gallery set in these approaches is constructed from random data collected from arbitrary walking directions, which will be put against a random probe set for testing. For example, [99] produces the gallery using all the 11 viewpoints in CASIA Dataset B and then tests the algorithm by choosing a random probe image from the same datasets. The same technique is used in [29,106] and [2,89], where the gallery set is created from random viewpoints. A view transformation model (VTM), which exploits projection techniques to tackle pose variation problems is provided by [89]. Models such as VTM are utilised to transform multiple data samples into the same angle. Similarly, sparsified representation-based cross-view model and discriminative video ranking model were used by [97] and [29], respectively.

The recent RealGait model [107], which sets a new state-of-the-art for gait recognition in the wild, is a pose-independent deep network model for cross-scene gait recognition. In this paper, they constructed a new gait dataset by extracting silhouettes from a real person Re-ID challenge in which the subjects are walking in an irregular manner. This dataset can be used to correlate gait recognition with person re-identification.

Furthermore, 3D data can reduce the computational cost of view alignment using simple geometrical transformation like [100], which creates 3D models from 2D images that multiple overlapping cameras have acquired. Synthesising these models generated virtual images with random viewpoints and constructed a gallery. A probe was chosen from authentic images collected by a single-view camera to test the algorithm. MOCAP was also employed by [94] and [108] to collect 3D data for a pose-independent person Re-ID system. Since kinect can provide a 3D volumetric representation of the human body, some works such as [95] use skeleton coordinates provided by kinect to demonstrate the impact of viewpoint variation on Gait person Re-ID systems. They use these findings in [101], and [109] in which they proposed a context-aware Gait person Re-ID method that used viewpoints as the context in their study.

3.3. Approaches to Feature Extraction

3.3.1. Human Gait Cycle

Gait signature or Gait feature is the subject's unique characteristics extracted from a sequence of sample images over a Gait cycle or stride. To understand the Gait cycle, we need to analyse, isolate and quantify a unique short and repeated task when someone walks. A Gait cycle can be measured from any gait event to the same gait event on the same foot. However, it is implied in the literature that a Gait cycle should be measured from the strike of one foot to the ground to the next strike of the same foot in a person's walking pattern. The Gait cycle is considered the fundamental unit of Gait. By measuring its temporal and spatial aspects, we can extract Gait signatures for a particular person of interest.

There are two primary phases of Gait cycle: the "swing" phase and the "stance" phase. These phases alternate and repeat when a person walks from point A to point B. The stance phase is the duration when the foot is on the ground, and the swing phase is the whole time when the foot is in the air. Consequently, observing the movements of two lower limbs is imperative in extracting spatial and temporal features. A breakdown of a gait cycle is presented in [110]. As the paper illustrates, when both limbs are in the stance phase simultaneously, the legs are in bipedal or double support, and when only one leg is in the stance phase, it is in the uni-pedal or single support sub-phase. According to [111], the swing phase has four sub-phases. (1) Pre-swing, which is when the foot is pushed off the ground, and the transition between the stance phase and swing phase happens, (2) Initial swing, when the foot clears off the ground. (3) Mid swing in which advancement of the foot continues, and (4) terminal swing that the foot is back in the beginning position of the gait cycle on the ground.

The traditional feature extraction algorithms in the literature are divided into two categories: model-based and model-free (motion-based) approaches. The model-based methods use the kinematics model of human Gait, and the model-free or motion-based approach finds correspondence between sequences of images (optical flow or silhouette) of the same Person to extract a gait signature. Table 2 shows examples of different works using model-based and model-free approaches. Another feature extraction category is based on deep learning, which has become more prevalent due to better performance and less complexity in discovering gait cycles in real-world situations.

Table 2 Details of some traditional methods in the literature

| Method | Approach | Feature | Feature Analysis Technique |

| [93] | Model-Based | 3D joint info | MMC PCA LDA |

| [89] | Model-Based | Motion model | Haar-like template for localization and magnitude and phase of fourier components for gait signature and KNN classifier |

| [104] | Model-Free and Model-Based | Fusion of depth information | Soft biometric cues and point cloud voxel-based width image for recognition; LMNN classifier |

| [33] | Model-Free | 2D Silhouettes | Gait and appearance features combined |

| [105] | Model-Free | Silhouettes | STHOG and colour fusion |

| [103] | Model-Free | Optical flow | HOFEI |

| [101] | Model-Based | 3D joint info | Context based ensemble fusion and SFS feature selection |

| [106, 29] | Model-Free | 2D Silhouettes | ColHOG3D |

| [98] | Model-Free | 3D joint info | Swiss system based cascade ranking |

| [83] | Model-Free | 2D Silhouettes | Virtual 3D sequential model generation |

| [97] | Model-Free | 2D Silhouettes | GEI-FDEI HSV |

| [100] | Model-Free | 2D Silhouettes | Virtual 3D sequential model generation |

| [102] | Model-Free | Point cloud | Frequency response of the height dynamics |

3.3.2. Model-Based Approaches Based on the Human Body

Early research on Person gait recognition and Re-ID attempted to model the human body because Gait essentially analyses the motion of distinct parts of the human body [84-86]. In these approaches, the silhouettes are obtained from 2D images by background subtraction and Binarisation. Specific characteristics pertaining to various body parts are extracted from each image in the sequence to form a human body model. Then, the parameters will be updated over time for each silhouette in the sequence and used to create a gait signature. These characteristics are usually metric parameters such as the length and width of a body part like arms, legs, torso, shoulders, head and their positions in the images. They can define the walking trajectory or the Euclidean distance between the limbs. Twenty-two such features are introduced in [87]. A posture-based method for gait recognition which learned posture characteristics by considering the displacement of all joints between two consecutive frames and a fixed centre of body coordinate system for all the joints is proposed in [88]. A two-point gait representation was introduced in [86], which modelled the motion of limbs regardless of the body shape. Other works such as [89] create a motion model by considering the motion of hips and knees in various phases of a gait cycle. In this approach, the features are extracted from 2D images. Gait parameters, namely the magnitude and phase of the Fourier components, are extracted using a Viewpoint rectification stage.

Because of the low quality of surveillance images captured in the real world, it is implausible to calculate a model robust enough for widespread practical usage. In recent years depth sensors (i.e. Kinect) and MOCAP systems made modelling the human body more manageable. With the help of a Kinect [112] extracted comprehensive gait information from all parts of the body using a 3D virtual skeleton. Other works such as [104] employed this method in gait recognition and automatic Re-ID systems. Two kinects RGB-D cameras were used in [104] to acquire information from the person's frontal and back view. Since kinects have a limited range for sensing depth, they each captured only a part of a subject's gait cycle, so they fused the information from all the sensors. The authors used the kinect software development kit to compute a set of soft biometric features for person Re-ID when the subject moves from one kinect to the next, and then feature vectors called width images were constructed at the granularity of small fractions of a Gait cycle.

3.3.3. Model Free Approaches

The model-free approaches can again be categorised into sequential and spatial-temporal motion-based methods. Sequential Gait is presented as a time sequence of the person's poses. The sequential model-free approach was first proposed in [113] in which temporal templates represent the motion. The temporal templates spot the motion and record a history of these movements. In a spatial-temporal model-free approach, Gait is represented by mapping the motions through time and space [114]. Different spatial-temporal methods are proposed in the literature, and almost all of them use silhouette sequences. Gait energy image (GEI), first introduced in [115], is a spatial-temporal representation of Gait that characterises the human walk properties for individual gait recognition, where a single image template is produced by taking the average of all binary silhouettes over the entire gait cycle.

The authors in [115] show how GEIs are generated from sequences of human walk silhouettes. For binary gait silhouette images  , the Gray level GEI is computed using the below formula where N is the number of frames in a sequence, t is the moment in time (frame number), and

, the Gray level GEI is computed using the below formula where N is the number of frames in a sequence, t is the moment in time (frame number), and  are the position coordinates in a 2D image. GEIs and their variations became the primary feature for many gait person Re-ID research, including [97]. In this work, GEI and its variation called frame difference energy image (FDEI) were used as gait features. Other works such as [103], and [83] also used histogram of flow energy images (HOFEI) and pose energy images, respectively. In [33] gait energy images and appearance features such as HSV histograms were fused to deliver a spatial-temporal model [115].

are the position coordinates in a 2D image. GEIs and their variations became the primary feature for many gait person Re-ID research, including [97]. In this work, GEI and its variation called frame difference energy image (FDEI) were used as gait features. Other works such as [103], and [83] also used histogram of flow energy images (HOFEI) and pose energy images, respectively. In [33] gait energy images and appearance features such as HSV histograms were fused to deliver a spatial-temporal model [115].

Optical flow is also used as a feature in several past works. Optical flow images (GFI) were proposed in [116] for the first time in 2011. The basis of GFIs was also sequences of binary silhouette images. The process of GFI generation was depicted and presented in [116]. Variations of optical flow-based methods appeared in the literature in the next years. A pyramidal fisher motion for multi-view gait recognition was used in [117] as descriptor for motions based on short-term trajectories of points. Other methods such as [118] and [119] are also based on silhouettes. A partial similarity matching method for Gait that could construct partial GEIs from 2D silhouettes was proposed in [118]. In addition, [119] generated virtual views by combining multi-view matrix representation and randomised kernel extreme learning. Spatial-temporal histogram of oriented gradient (STHOG) is proposed in [105] where it was suggested that the STHOG feature can represent both Motion and shape data. A syntactic 3D volume of the subject from images acquired by multiple overlapping 2D cameras from which features were extracted for gait recognition was generated in [100].

3.3.4. Fusion of Modality Approaches

Re-ID results are improved by combining multiple features. Gait-based features have been successfully combined with other types of features in Re-ID.

3.3.5. Deep Learning-Based Approaches

Deep learning is a subarea of machine learning (ML) that tries to train computers on learning by example. This technique has been used before and is the key to technologies such as driverless cars, detecting a stop sign or distinguishing an object from a human being. Other applications can be in voice control devices such as google assistant, Apple Siri and Amazon Alexa projects. Deep learning is getting much attention from the research community because its results were not previously possible.

In this method, a computer model learns how to perform classifications using the training it previously got from sound, text, images or video frames and gains unbelievable accuracy that is sometimes better than humans. Vast collections of labelled data and neural networks with various levels are used to train deep learning models. Deep learning receives such impressive recognition because of its higher than before accuracy level. This helps big companies keep the users happy, meet their expectations, and decrease safety concerns in more critical projects like driverless cars. Recent developments show that deep learning transcends human beings in tasks like feature extraction in images.

In recent years deep learning approaches to gait recognition have been progressing fast [120,121]. The idea of a siamese convolutional neural network (SCNN) was introduced in [122] and utilised in numerous research after that [123-125]. A Siamese network uses multiple identical sub-networks with the same weights and parameters to find a relationship between two related things. By mapping Input patterns into a target space and calculating their similarity metrics, they can discriminate between objects. If the objects are the same, the metrics will be small, and if they are different, the metrics are significant. Some applications of Siamese networks are face recognition, signature verification, and in recent years person re-identification [125-127]. Siamese frameworks are suitable for gait recognition scenarios since there are usually many categories and a small volume of sample data in each category. Siamese CNN for gait feature extraction and person Re-ID was first mentioned in [120] and then in [128]. Based on that [121] proposed a CNN-based similarity learning method for gait recognition, and [129] designed a cycle-consistent cross-view gait recognition method by using generative adversarial networks (GANs) to create realistic GEIs. To handle angle variations, authors in [119] proposed localised Grassmann mean representatives with partial least squares regression (LoGPLS) method for gait recognition, and authors in [130] offered an autocorrelation feature which is very close to the GEI. Multi-channel templates for Gait called period energy images (PEI) and local gate energy images (LGEI) with a self adaptive hidden markov model (SAHMM) were introduced in [131] and [132], respectively.

All of the above works used GEIs as input data for their systems. As mentioned before, in the process of generating GEIs from gait sequences, a vast amount of Gait temporal data will be lost, so in more recent works, attention mechanisms [133,28], and pooling approaches [134,135] were used. A sequential vector of locally aggregated descriptor (SeqVLAD) was proposed in [133] which combined convolutional RNNs with a VLAD encoding process to combine spatial and temporal information from videos. Sparse temporal pooling, line pooling and trajectory pooling were also used in various works to extract gait features [134,135]. Some works, for example, [136] and [137] used raw silhouette sequences instead of GEI or its variations to preserve the temporal features. In [137] they used ResNet and Long-Short Term Memory (LSTM). GANbased methods were used in recent works on large-scale datasets [130,138,139]. Researchers in [140] even used RGB image sequences instead of silhouettes with auto-encoder and LSTM networks. Liao et al. [141] even proposed a model-based gait recognition method with the use of CNNs, which did gait recognition as well as predicting the angle. A list is provided in Table 3 of some more important deep learning methods along with their features and techniques.

Table 3 Details of most notable deep learning methods in the literature

| Method | Feature Analysis Technique | Feature |

| [141] | Convolutional Neural Networks (CNNs) | Joints Relationship Pyramid Mapping (JRPM) |

| [131] | Generative Adversarial Networks (GAN) | Period Energy Image (PEI) |

| [137,140] | Convolutional Neural Networks (CNNs) Long Short-Term Memory (LSTM) | Silhouette Sequences |

| [132] | Self-Adaptive Hidden Markov Model (SAHMM) | Local Gait Energy Image (LGEI) |

| [136] | Convolutional Neural Network (CNN) | Silhouette Sequences |

| [138] | Generative Adversarial Networks (GAN) | Gait Energy Image (GEI) |

| [119] | Localized Grassmann mean representatives | Partial Least Squares Regression (LoGPLS) |

| [121,130] | Localized Grassmann mean representatives | Convolutional Neural Network (CNN) |

It is essential to mention that various features can be fused to improve a person Re-ID system's performance. However, noise and irrelevant information have to be eliminated. Many feature selection and dimension reduction techniques have been carried out in the past to eliminate redundant and irrelevant data collected in feature extraction [142]. Principal component analysis (PCA) is one of the most popular techniques for dimension reduction [83, 99]. Moreover, probabilistic principal component analysis (PPCA) [143] and multilinear principal component analysis (MPCA) [94] have been used in person Re-ID. The authors in [93] even used a fusion of PCA and linear discriminant analysis(LDA) to achieve better accuracy. Recherches in [101] and [102] utilised algorithms such as KL-divergence and Sequential Forward Selection to achieve feature selection.

Although deep learning was theorised in the 1980s, it was not recognised until recently due to two main reasons. Firstly, deep learning algorithms need large quantities of labelled footage or other data. For example, many subjects must be used for training in human recognition before testing the model's accuracy. Additionally, deep learning algorithms need significant computing power. The advancements in parallel architectures for high-performance graphical processing units (HPGPUs) help this issue immensely. These architectures can be combined with clusters or cloud computing to reduce the training time significantly.

Despite the disadvantages mentioned above, there are practical implementations of deep learning in industries such as (1) Automated driving where objects like pedestrians and stop signs are automatically detected, which reduces the possibility of collisions. (2) Medical research, specifically cancer research where deep learning is used to detect cancerous cells in a human body automatically. UCLA researchers build a microprocessor that can detect and analyse 36 million images per second using deep learning and photonic time stretch for cancer diagnosis. (3) Aerospace and defence projects where deep learning satellites are used to identify objects in areas of interest and safe zones for troops deployed in a specific area. (4) Industrial automation, where the workers' safety is improved by detecting the unsafe distance (from the machines) for workers operating heavy machines. (5) Electronics for speech and voice recognition such as voice-assisted tools that translate speech into words or control devices around the house.

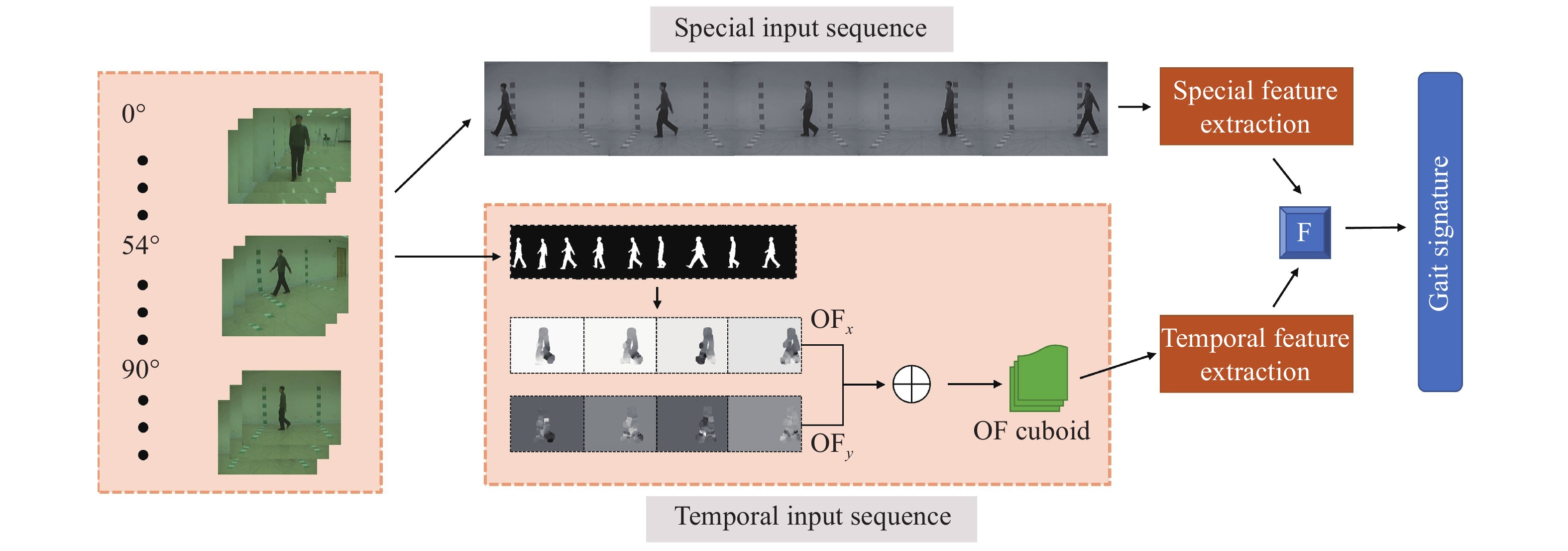

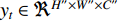

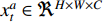

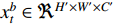

3.4. Multiple Modality Spatial-Temporal Approaches

Fusion of modalities have been used in the past for handcrafted features, optical flow maps, grayscale and silhouette image sequences to improve the robustness of gait recognition methods [144]. Similar feature fusion could be performed on different combinations of modalities and networks like in [145]. In the past, [146] has proved the most promising results for this task. Several types of input modalities are also considered to generate a single gait signature from the input sequences, including handcrafted high-level descriptors, grayscale images, silhouette sequences, and optical flow maps [147]. Moreover, information fusion at different levels has been studied for the final two-stream spatial-temporal CNN architecture. The most challenging part of this approach was consolidating temporal features and preserving them as well as the spatial features for use in high-layered networks without losing information along the way. All modalities were tried out on the range of our proposed architectures and compared against state-of-the-art [148], where attempts were made to fuse two parallel networks at different architectural levels with various fusion techniques and appropriate modalities to improve the results. A very high-level example of this proposed approach can be seen in Figure 7. As can be seen, image sequences of a particular person are fed into the architecture to extract features from two separate modalities, namely optical flow (OF) and grayscale. Using different CNN architectures, they extract a gait signature based on each modality and fused them at the end for use in a classifier.

Figure 7. High-level illustration of our proposed two-stream CNN.

3.4.1. Use of High-Level Descriptors

It is not possible to use low-level descriptors as inputs for an algorithm since they only provide an abundance of information about corners and edges, and in the case of divergence, low-level descriptors only provide information of curl, sheer (DCS) [149] and low-level kinematic motions. Such information is usually summarised as inputs for a support vector machine (SVM) or other classification algorithms.

To find the patterns in the raw data, a clustering method could be employed to create a dictionary of gait signature vectors using the collected patterns. When creating the high-level descriptor, no visual features are considered since the algorithm uses low-level features as input data. Authors such as [150] used fisher vectors (FVs) to create hybrid classification architectures with the help of CNNs. A pyramidal fisher Motion descriptor for Multi-view Gait Recognition was proposed in [117] which had promising results on the SVM classification algorithm. The descriptor uses densely collected local motion features as low-level descriptors and fisher vectors to summarise low-level features so as to create high-level descriptors for the SVM, where an extension of Bag of Words (BOW) [151] was used based on the gaussian mixture model (GMM), and an image representation was computed by a gradient vector using a generative probabilistic model in fisher vectors encoding. In [117], the term fisher motion was used to refer to the high-level descriptor generated from low-level motion descriptors.

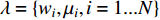

To create high-level descriptors according to the above method, a video clip (  ) can be represented by the below gradient vector equation for

) can be represented by the below gradient vector equation for  low-level descriptors (

low-level descriptors (  ), where,

), where,  is the low-level descriptor independently generated by the Gaussian Mixture Model with

is the low-level descriptor independently generated by the Gaussian Mixture Model with  parameters and

parameters and  is the gradient operator.

is the gradient operator.

With this method, a fisher kernel is calculated to compare two video clips  and

and  .

.  is the Fisher information matrix described in [152]. As

is the Fisher information matrix described in [152]. As  is symmetric and positive, it has a Cholesky decomposition

is symmetric and positive, it has a Cholesky decomposition  and

and  can be rewritten as a dot-product between normalized vectors which is then known as the Fisher Vector of video

can be rewritten as a dot-product between normalized vectors which is then known as the Fisher Vector of video  .

.

In this method, the training set is used to compute a dictionary of patterns obtained with the Gaussian Mixture Model, and then a gradient vector is computed in the dictionary to build a feature vector used in a classification algorithm.

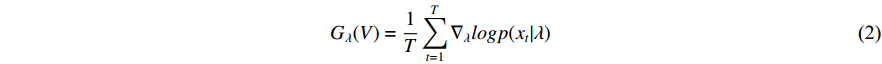

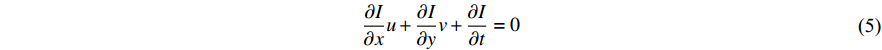

3.4.2. Use of Optical Flow

Features can be extracted based on the assumption that temporal descriptors for Gait could be extracted from optical flow [153] and the fact that CNNs can self-learn features from optical flow maps. Optical flow is essentially the movement of a person from one frame to the next in a sequence of frames. The motion is calculated based on the movement between the camera and the Person, giving us valuable temporal information in a gait sequence. A person's movements can be tracked across consecutive frames and estimate their position and even walking velocity using optical flow. Image intensity  is the basis for calculating optical flow. The optical flow process between two proceeding frame, where image intensity was represented as a function of time and space was presented in [116]. Here,

is the basis for calculating optical flow. The optical flow process between two proceeding frame, where image intensity was represented as a function of time and space was presented in [116]. Here,  is time or frame number and

is time or frame number and  is the position of one pixel in the 2D image. If the same pixel id is displaced by

is the position of one pixel in the 2D image. If the same pixel id is displaced by  in the

in the  direction and

direction and  in the

in the  direction in a period of

direction in a period of  , then the new image could be described as:

, then the new image could be described as:

Hence, the optical flow equation is shown in Euation 5 Where  is horizontal image gradient along the

is horizontal image gradient along the  axis and

axis and  is the vertical image gradient along the

is the vertical image gradient along the  axis and

axis and  is the temporal image gradient. To solve the optical flow equation and determine the motion over time, one just needs to solve

is the temporal image gradient. To solve the optical flow equation and determine the motion over time, one just needs to solve  and

and  . As multiple unknown variables are needed to solve this equation, some algorithms have been proposed to address the issue.

. As multiple unknown variables are needed to solve this equation, some algorithms have been proposed to address the issue.

Optical flow has been used in the past as the input for CNN and archived excellent results for action recognition [154-156]. There are two types of optical flow, namely Sparse-OF and Dense-OF algorithms. The output of a typical Sparse-OF algorithm is vectors which contain information about edges and corners and some other features of the moving object in the frame. In contrast, Dense-OF algorithms produce vectors for all the pixels in the image like the Farneback algorithm [157]. Some traditional implementations of sparse optical flow are Horn-Schunck [158] and Lucas-Kanade [159] algorithms. Most of the techniques rely on energy minimization in a coarse-to-fine framework [160,161] and [162]. A numerical method warps one of the images towards the other from the most coarse level to the finest level and cleans the optical flow with each iteration, however, for motion estimation, normal flow-based methods present a better outcome [163,164]. Deep learning methods such as FLowNet [165], FlowNet2 [166] and LiteFlowNet [167], use CNNs to estimate the optical flow and are the most promising in action recognition problems. An approach was proposed in [148], where they use a stack of optical flow displacement fields for several consecutive frames, much like proposed in [154].

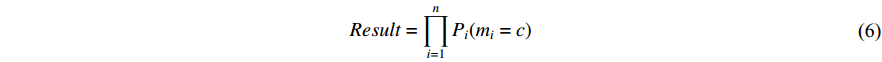

3.4.3. Fusion

Depending on the number of modalities in the above approaches the extracted features by the CNNs have to be combined using fusion to extract a single gait signature. The expectation is that a better classification score can be achieved by fusing more modalities. Two popular fusion methods could be used for this purpose: (1) late fusion and (2) early fusion.

• Late Fusion

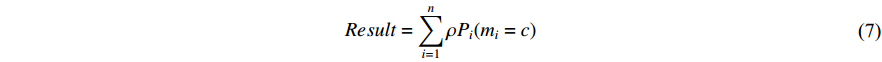

It happens after the softmax layer to combine the output of all the CNNs. For this purpose, we can take the product or the sum of all softmax vectors extracted at the end of each CNN. So if we have  probability vectors at the end of each CNN for

probability vectors at the end of each CNN for  different modalities (two modalities at this point), we can use any of the two equations below to produce a result vector that shows the probability of the Person in the sequence

different modalities (two modalities at this point), we can use any of the two equations below to produce a result vector that shows the probability of the Person in the sequence  having the same identity as the Person in class c. The symbol

having the same identity as the Person in class c. The symbol  represents a weight related to the modality and is assigned based on experiments.

represents a weight related to the modality and is assigned based on experiments.

The two-stream architecture in [154] employed late fusion, but since the fusion happens using the softmax layers, it neglects the correlation between temporal and spatial features at a pixel level. Also, since spatial CNN works on one image at a time and temporal CNN on a stack of ten optical flow images, a lot of the temporal information is ignored.

• Early Fusion

On the other hand, early fusion can happen at any layer of the CNN architecture as long as it is before the softmax layer. If the fusion is performed in a convolutional layer, the descriptor is a matrix, and if it is performed on a fully connected layer, it is a vector. Fusing at a convolutional layer is only possible if the two networks have the same spatial resolution at the location of the layers intended to be fused. We can stack (overlay) the layers from one network to the other so the channel responses at the same pixels in the temporal and spatial stream can correspond. If we presume that (1) separate channels in the spatial stream are responsible for different parts of the human body (leg, foot, head), and (2) a channel in the temporal stream is in charge of the motion information (moving the foot forward), then after stacking the channels, the kernels in the next layer needs to learn this correspondence as weights.

There are several ways of fusing layers between two networks. If  is a fusion function that fuses

is a fusion function that fuses  and

and  which are two feature maps at time

which are two feature maps at time  , then

, then  has the output of

has the output of  for

for  and

and  . The width, height and channel numbers are represented as

. The width, height and channel numbers are represented as  ,

,  and

and  respectively and the fusion function is represented as:

respectively and the fusion function is represented as:

To decide where and how to perform the fusion, we first go through a range of feasible methods introduced in the literature. We assume the same dimensions for  ,

,  and

and  as we discussed before. So for

as we discussed before. So for  , sum fusion sums the feature maps at the same location in space indicated by

, sum fusion sums the feature maps at the same location in space indicated by  ,

,  and, where

and, where  is the number of channels.

is the number of channels.

Since the channel numbers are chosen at random, the correspondence between the networks is arbitrary. Therefore, more training is necessary over the following layers to make this correspondence helpful. The same rule applies to MAX fusion which takes the maximum of two feature maps.

Concatenation fusion stacks the feature maps at location  for channels

for channels  and outputs

and outputs  , but it does not define correspondence, so they need to be defined in the next layers by learning new kernels.

, but it does not define correspondence, so they need to be defined in the next layers by learning new kernels.

The feature maps can be stacked as shown and succeeded by a convolution on the result with a kernel  and bias of

and bias of  , to solve the problem of concatenation fusion. Note that the number of output channels is C and the dimensions for the kernel are

, to solve the problem of concatenation fusion. Note that the number of output channels is C and the dimensions for the kernel are  . For early fusion in the fully connected layers of 2D and 3D CNNs and also fusion of the gait signatures obtained by each modality, we perform a concatenation operation followed by another three fully connected layers with dropout before the softmax layer. This fusion is best performed after the last fully connected layer since the signature is already extracted at that point. In ResNet, we will not add fully connected layers after concatenation since it might lead to overfitting. Note that the Relu function is used for all activations in our networks, so for the extra layers, it remains the same.

. For early fusion in the fully connected layers of 2D and 3D CNNs and also fusion of the gait signatures obtained by each modality, we perform a concatenation operation followed by another three fully connected layers with dropout before the softmax layer. This fusion is best performed after the last fully connected layer since the signature is already extracted at that point. In ResNet, we will not add fully connected layers after concatenation since it might lead to overfitting. Note that the Relu function is used for all activations in our networks, so for the extra layers, it remains the same.

Adding fusion significantly affects the number of parameters used in the computation. Moreover, we also need to consider the fusion process over time (temporally). Our first consideration is to average all the feature maps  over time

over time  which was used in [154]. By this way, only two-dimensional pooling is possible, which loses lots of temporal information. Next, we consider using 3D pooling [168] on a stack of feature maps

which was used in [154]. By this way, only two-dimensional pooling is possible, which loses lots of temporal information. Next, we consider using 3D pooling [168] on a stack of feature maps  over time

over time  . This layer applies max pooling to a stack of data of size

. This layer applies max pooling to a stack of data of size  . It is also possible to perform a convolution with a fusion kernel before performing 3D pooling, much like [169]. This fusion technique is illustrated in [148].

. It is also possible to perform a convolution with a fusion kernel before performing 3D pooling, much like [169]. This fusion technique is illustrated in [148].

3.5. Spatial-Temporal Attention Approaches

As video surveillance becomes more widespread and video data becomes more available, the need for a better and more robust person Re-ID framework becomes more evident. Furthermore, pedestrians in surveillance videos are the central area of concern in real-world security systems. Hence, new challenges are presented to the research community to identify a person of interest and understand human motion. Our study on the human Gait provides a non-invasive and robust way of feature extraction which can be used for gait recognition in surveillance systems in a remote and non-invasive manner.

In real-world scenarios, the subjects passing through an elaborate surveillance network cannot be expected to act predictably. We might not even get a complete gait cycle from a person of interest in most cases. Clothing variation or carry bags considerably impact the system's performance in re-identification problems. Other abnormalities, including the camera angle, significantly aggravate the intra-class variation. Moreover, the similarity between the gait appearances of different people extracted from low-level information introduces inter-class variations, resulting in similar gait signatures in more complex cases.

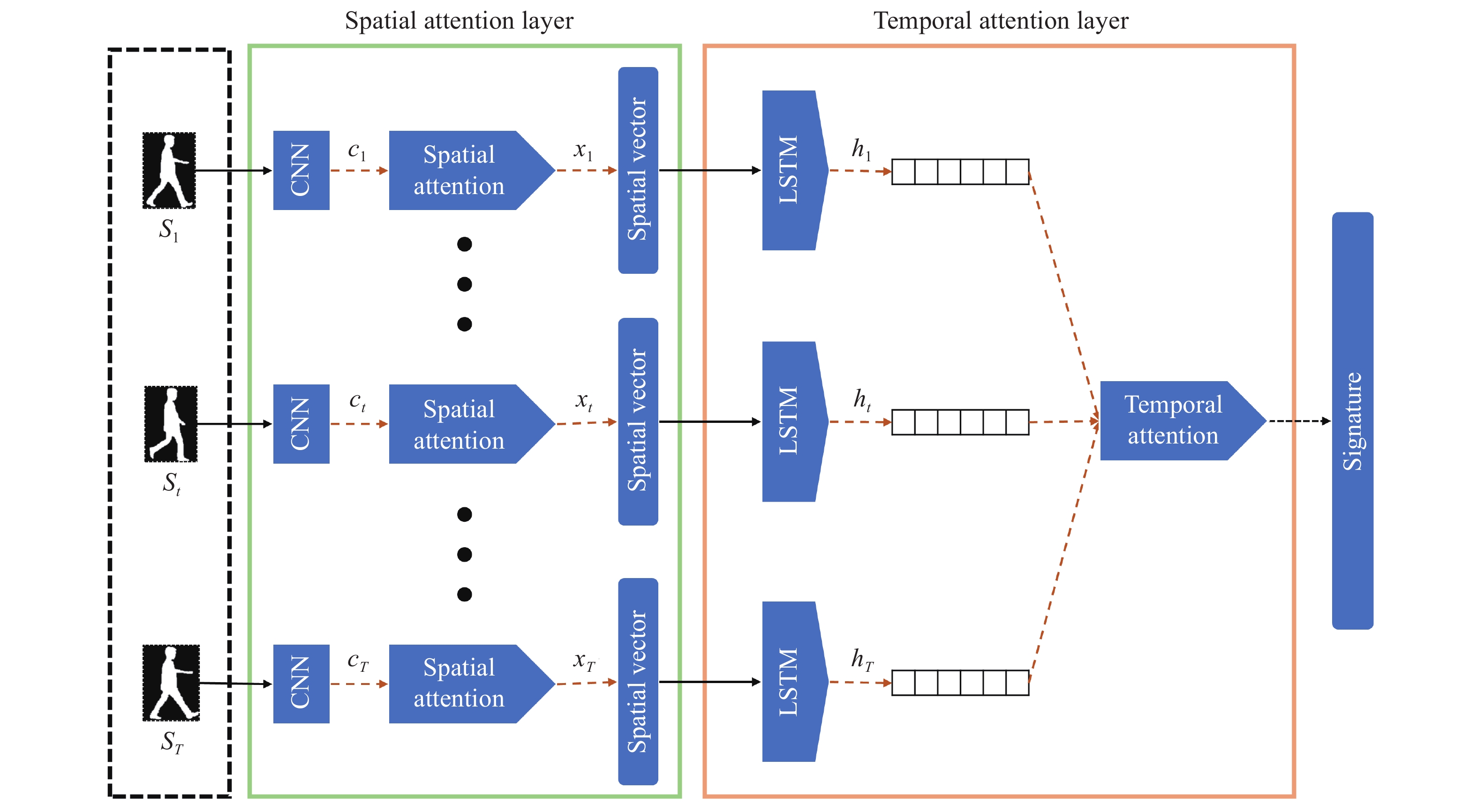

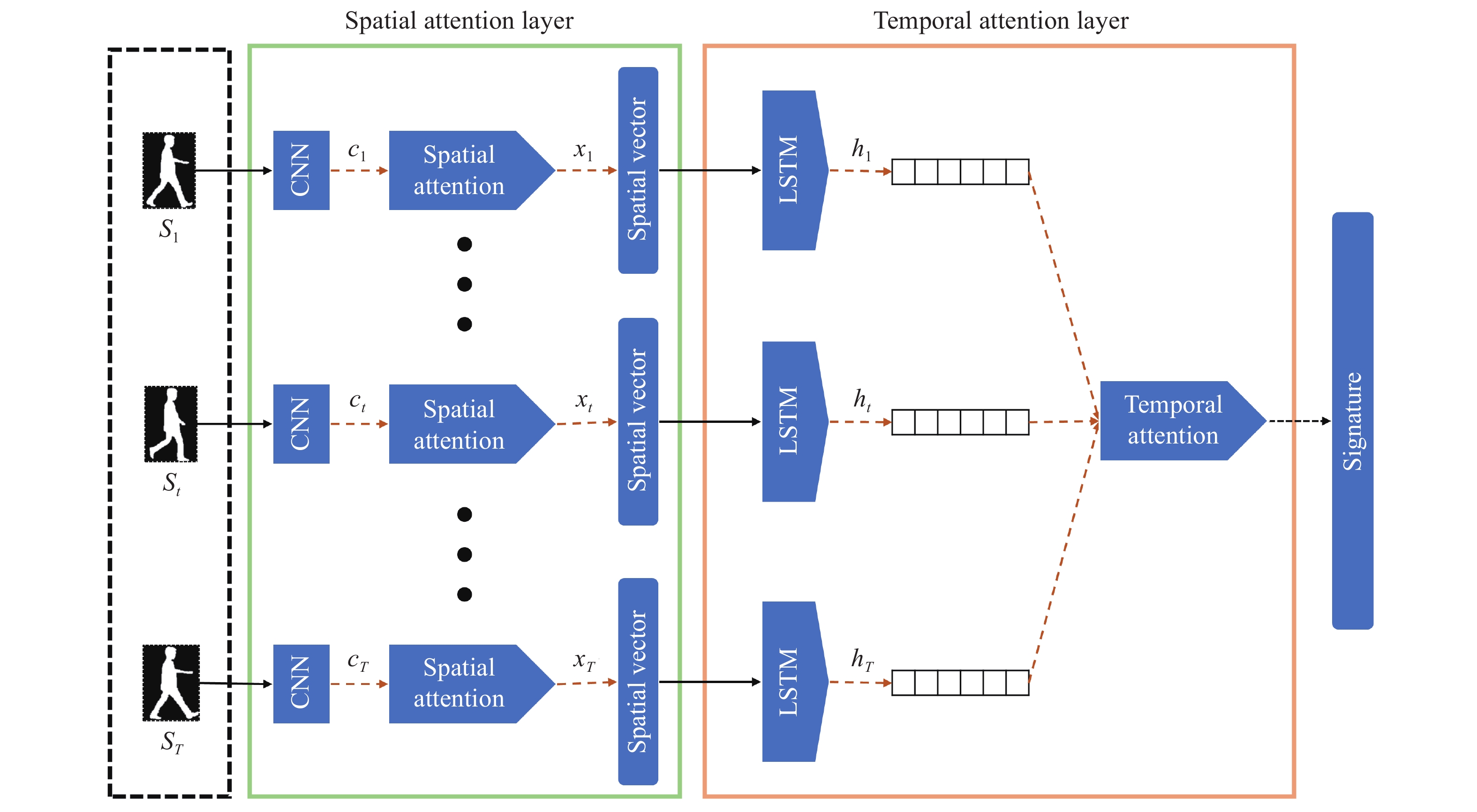

Unfortunately, most existing methods use shallow motion cues by employing GEIs to represent temporal data, leading to the loss of a vast amount of dynamic information. Although it has been tried in the literature [120,121], [170] to create a deep learning-based gait recognition option that can robustly extract gait features, at least one complete gait cycle needs to be detected, and this is not robust to the viewpoint changes. Therefore, irregular gait recognition concerning viewpoint variations still need particular attention.