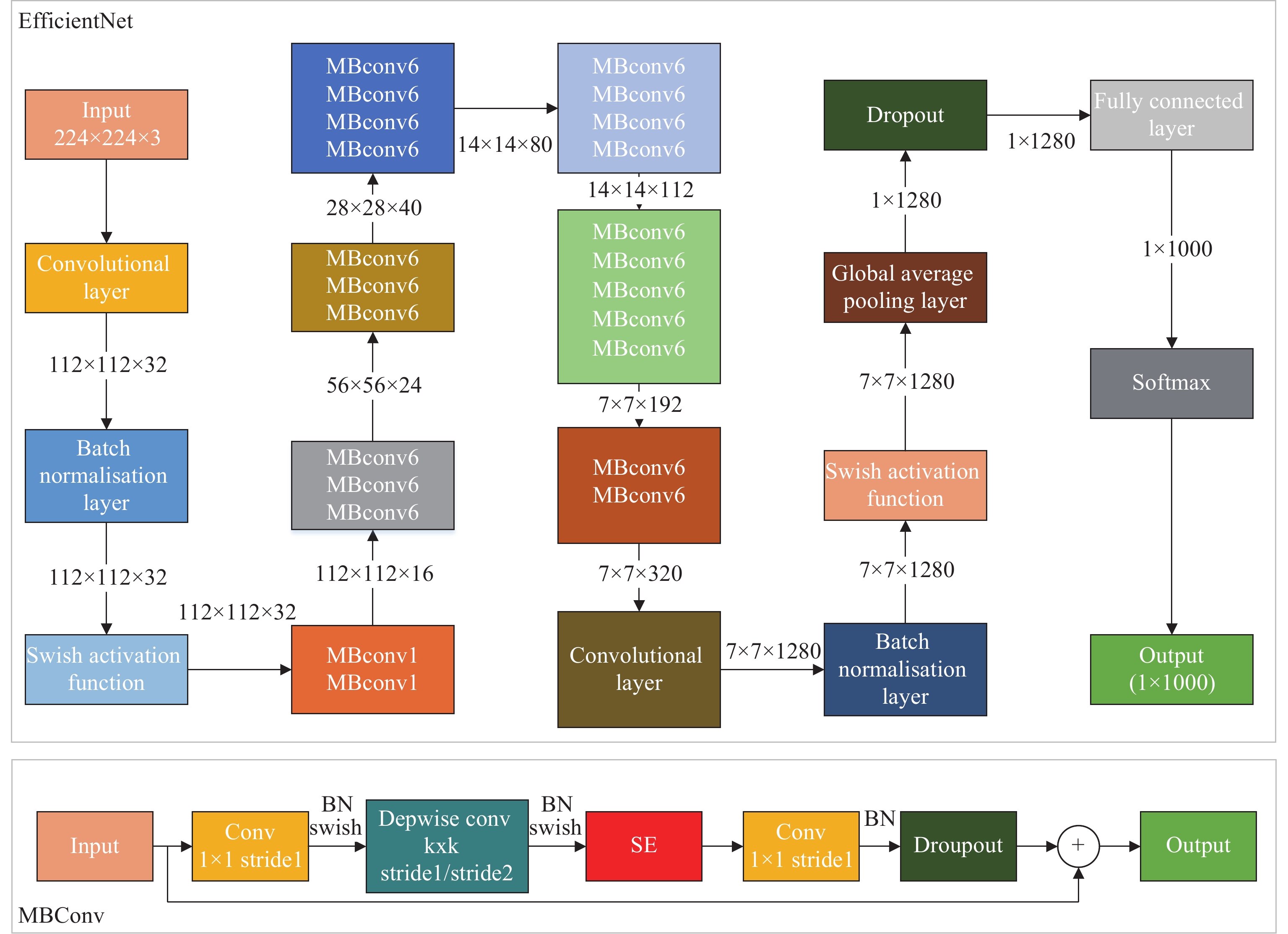

The accuracy of traffic detection devices can degrade over time. Because manual counting is time-consuming and laborious, and because existing approaches suffer from high misdetection rates and tracking failures, there is an urgent need for a deep learning model that stably achieves a detection accuracy above 90% and can be used to evaluate whether a device's accuracy still meets requirements. In this study, a real-time traffic flow statistics method based on dual-granularity classification is proposed to address these problems. The method has two stages. The first stage uses YOLOv5 to detect all motorized and non-motorized vehicles appearing in the scene. The second stage uses EfficientNet to classify the motorized vehicles detected in the first stage into six categories. This dual-granularity classification simplifies the problem and significantly reduces the probability of false detection. To associate vehicles across consecutive video frames, tracking is implemented with DeepSORT, combined with a ResNet50-based vehicle re-identification model to improve tracking accuracy. Experimental results show that the proposed method effectively solves the misdetection and tracking problems. Moreover, combined with the two-lane counting method, it achieves a real-time statistical accuracy of 98.7%.
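The coarse-to-fine flow described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `Detection`, `Track`, `count_vehicles`, and the labels `"motorized"` and `"non_motorized"` are hypothetical, and the three callables stand in for the YOLOv5 detector, the DeepSORT tracker, and the EfficientNet classifier without calling any real library. The sketch also counts each track ID once on first appearance, which is a simplification; the two-lane counting rule is not specified in the abstract and is not reproduced here.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass(frozen=True)
class Detection:
    """Coarse-stage output: a box plus a coarse label (label names are assumptions)."""
    box: Box
    coarse_label: str  # "motorized" or "non_motorized"


@dataclass(frozen=True)
class Track:
    """Tracker output: a detection with an identity that persists across frames."""
    track_id: int
    box: Box
    coarse_label: str


def count_vehicles(
    frames: Iterable[Any],
    detect: Callable[[Any], List[Detection]],
    track: Callable[[Any, List[Detection]], List[Track]],
    classify_fine: Callable[[Any, Box], str],
) -> Counter:
    """Count each tracked vehicle once, keyed by its final label.

    detect        -- stand-in for the coarse detector (stage 1)
    track         -- stand-in for the multi-object tracker
    classify_fine -- stand-in for the fine-grained classifier (stage 2)
    """
    labels: Dict[int, str] = {}  # track_id -> label, fixed when first seen
    for frame in frames:
        detections = detect(frame)  # stage 1: coarse granularity
        for t in track(frame, detections):
            if t.track_id in labels:
                continue  # this vehicle has already been counted
            if t.coarse_label == "motorized":
                # stage 2: only motorized vehicles get a fine-grained class
                labels[t.track_id] = classify_fine(frame, t.box)
            else:
                labels[t.track_id] = t.coarse_label
    return Counter(labels.values())
```

Passing the three stages as callables keeps the sketch independent of any particular detector, tracker, or classifier, so each can be replaced by a real model wrapper or by a stub for testing. Because the fine classifier runs only on motorized tracks, the second stage never has to separate motorized from non-motorized vehicles, which is the simplification the dual-granularity design relies on.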

- Open Access

- Article

Real-Time Traffic Flow Statistics Based on Dual-Granularity Classification

Yanchao Bi, Yuyan Yin, Xinfeng Liu *, Xiushan Nie, Chenxi Zou, Junbiao Du

Received: 17 Jun 2023 | Accepted: 13 Sep 2023 | Published: 26 Sep 2023

Keywords

dual-granularity classification | vehicle re-identification | traffic | multi-objective tracking algorithm | dual-lane counting

How to Cite

Bi, Y.; Yin, Y.; Liu, X.; Nie, X.; Zou, C.; Du, J. Real-Time Traffic Flow Statistics Based on Dual-Granularity Classification. International Journal of Network Dynamics and Intelligence 2023, 2 (3), 100013. https://doi.org/10.53941/ijndi.2023.100013.

Copyright & License

Copyright (c) 2023 by the authors.

This work is licensed under a Creative Commons Attribution 4.0 International License.
