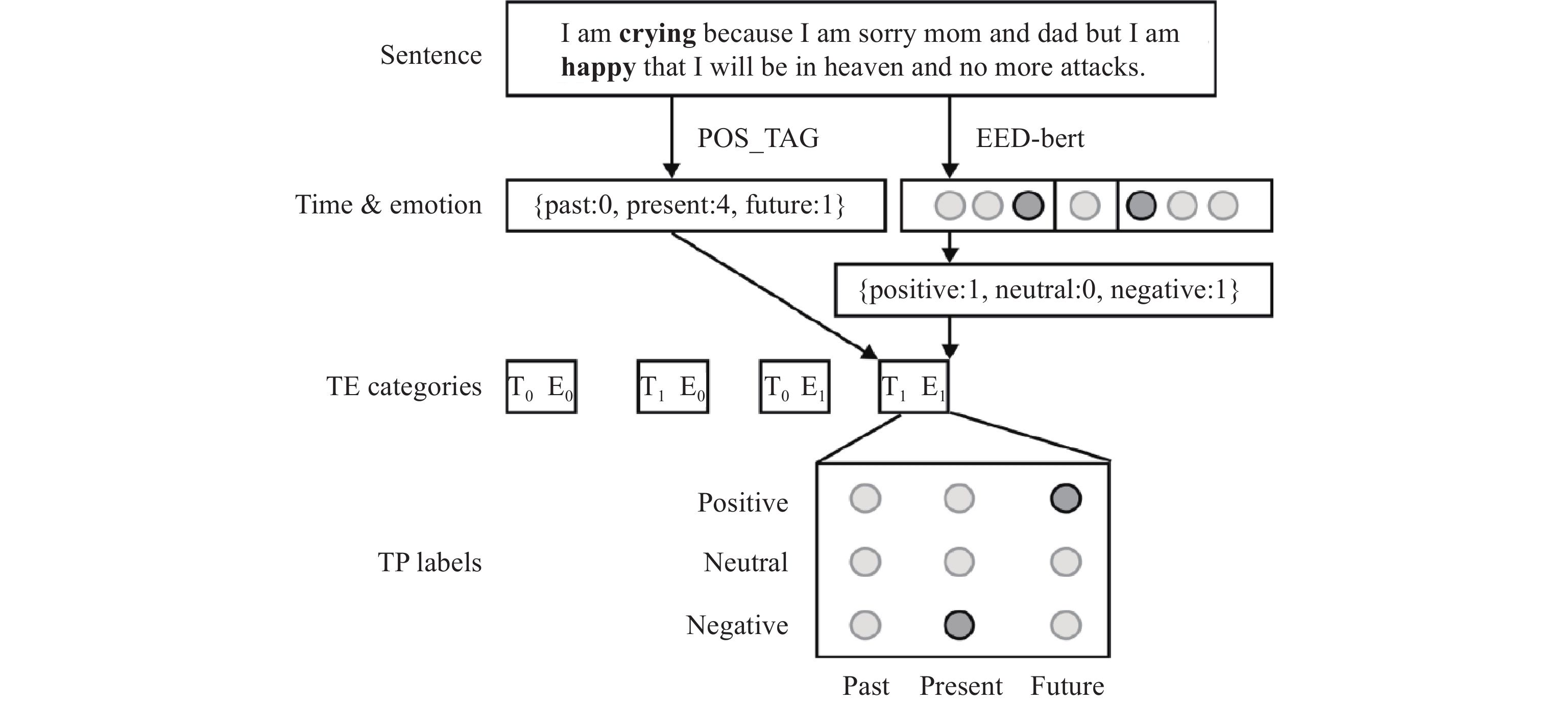

Suicide notes are written documents left behind by suicide victims, either on paper or on social media, and can help us understand the mentality and thought processes of those struggling with suicidal thoughts. In our preliminary work, we proposed using Time Perspective (TP), which considers how the way people think of or appraise their past, present, or future life shapes their behavior, to detect suicide tendency from suicide notes. The detection result depends heavily on a ternary classification task that assigns any suicide tendency to one of three pre-defined types. In this work, we define the suicidal emotion trajectory, a TP-based concept that depicts the dynamic evolution of an individual's emotional state over time. Predicting this trajectory serves as an auxiliary task to the primary ternary classification task in a multi-task learning model, TP-MultiBert. The model uses Bidirectional Encoder Representations from Transformers (BERT) components in place of the GloVe embeddings used in the previous model. Thanks to BERT's ability to capture contextual relationships between words, and to the ability of multi-task learning to leverage complementary information across tasks, the BERT-based model shows promising results in further improving the performance of suicidal ideation detection.
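To make the primary/auxiliary arrangement concrete, the following is a minimal sketch of a joint training objective for one example, written in plain Python. It is not the authors' implementation: the abstract does not state the loss function, the task weighting, or the number of emotion-trajectory classes, so the cross-entropy losses, the `aux_weight` parameter, and all function names here are illustrative assumptions.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Negative log-likelihood of the target class index."""
    return -math.log(softmax(logits)[target])


def multitask_loss(primary_logits, primary_target,
                   aux_logits, aux_target, aux_weight=0.5):
    """Primary loss plus a weighted auxiliary loss.

    primary_*: the ternary suicide-tendency classification (3 logits).
    aux_*: the emotion-trajectory task; its class count is an assumption.
    aux_weight: hypothetical trade-off factor, not taken from the paper.
    """
    primary = cross_entropy(primary_logits, primary_target)
    auxiliary = cross_entropy(aux_logits, aux_target)
    return primary + aux_weight * auxiliary


# Example call: three tendency types, four assumed trajectory classes.
loss = multitask_loss([2.0, 0.1, -1.0], 0, [0.3, 0.2, 1.5, -0.4], 2)
```

In a full model, both sets of logits would come from task-specific heads on top of a shared encoder, so gradients from the auxiliary term also shape the shared representation. Setting `aux_weight` to zero reduces the objective to the primary classification loss alone.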

- Open Access

- Article

Time Perspective-Enhanced Suicidal Ideation Detection Using Multi-Task Learning

- Qianyi Yang 1,

- Jing Zhou 1, *,

- Zheng Wei 2


Received: 25 Oct 2023 | Accepted: 05 Mar 2024 | Published: 26 Jun 2024

Keywords

suicide tendency | Time Perspective (TP) | suicidal emotion trajectory | multi-task learning | BERT

How to Cite

Yang, Q.; Zhou, J.; Wei, Z. Time Perspective-Enhanced Suicidal Ideation Detection Using Multi-Task Learning. International Journal of Network Dynamics and Intelligence 2024, 3 (2), 100011. https://doi.org/10.53941/ijndi.2024.100011.

Copyright & License

Copyright (c) 2024 by the authors.

This work is licensed under a Creative Commons Attribution 4.0 International License.
