This work is licensed under a Creative Commons Attribution 4.0 International License.

Survey/review study

Is High-Fidelity Important for Human-like Virtual Avatars in Human Computer Interactions?

Qiongdan Cao, Hui Yu*, Paul Charisse, Si Qiao, and Brett Stevens

1 University of Portsmouth, Portsmouth, PO1 2UP, UK

* Correspondence: hui.yu@port.ac.uk

Received: 7 February 2023

Accepted: 6 March 2023

Published: 27 March 2023

Abstract: As virtual avatars have become increasingly popular in recent years, current needs indicate that “interactivity” is crucial for inducing a positive response from users towards these avatars, especially in human computer interactions (HCI). This paper reviews recent works on high-fidelity human-like virtual avatars (e.g. high visual and motion fidelity) and discusses the critical question: “Is high-fidelity a positive choice for virtual avatars to achieve better interactions in HCI?” Furthermore, we summarise current technical approaches to developing those virtual avatars. We investigate the advantages and disadvantages of high-fidelity virtual avatars in different areas, focusing on the effect of motion, especially of the upper body. Research shows that high-fidelity is a positive choice for virtual avatars, although it may depend on the application.

Keywords: virtual avatars; high-fidelity; appearance; facial expression; human computer interactions

1. Introduction

The rapid development of technologies makes the production of virtual avatars more efficient and more accessible, which results in the wide use of virtual avatars in different areas. Compared with virtual avatars in the last two decades, current techniques, such as high-quality rendering engines [1] and motion capture systems [2], have significantly improved the fidelity of virtual avatars, which makes virtual avatars nowadays look more human not only in their appearance but also in their motion and intelligence.

High realism, also called high-fidelity, has been suggested as a positive choice for virtual avatars [3]. Users show higher intentions for interacting with virtual avatars with high-fidelity human-like features than a non-human-like virtual avatar [4,5]. These avatars with high fidelity appearances are fast becoming popular in films, games and other applications such as online social applications. Also, research indicates that these avatars can positively influence user engagement [6] and enjoyment by providing the feeling of familiarity, which can evoke the same feeling that people usually have in real life.

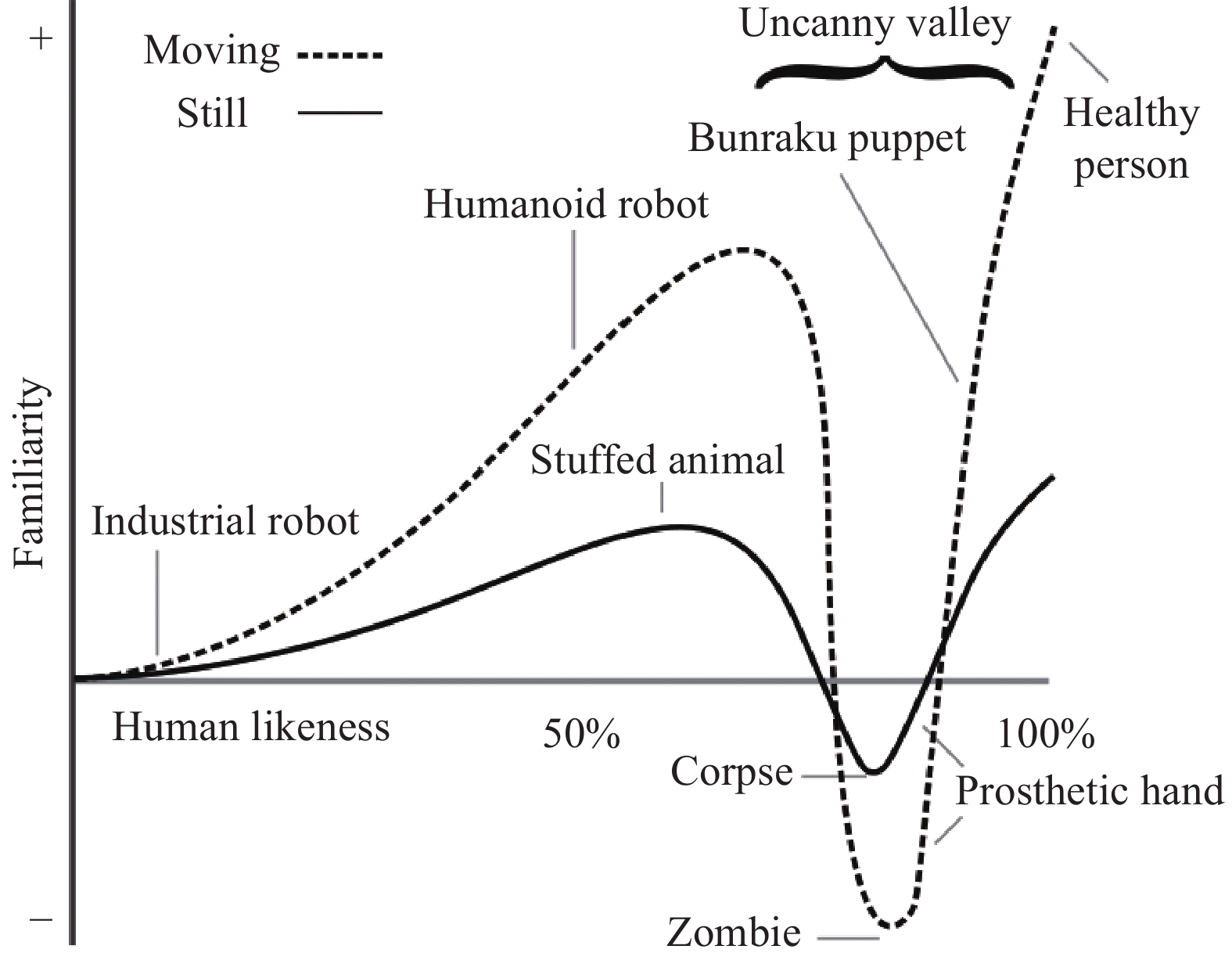

Despite all the benefits mentioned above, the disadvantages of high-fidelity virtual avatars still need to be better mitigated. It has been critically discussed that, up to a certain degree, the more human-like a virtual avatar appears, the creepier it might seem. This idea was first reported as the Uncanny Valley [7]. Logically, the affinity between viewers and a human-like avatar would keep increasing as the avatar's realism rises. However, there is a point where the affinity between viewers and the avatar drops (Figure 1) and stays suppressed (in a valley) until the realism of the avatar increases past a second point, where it is thought to approach the level of a healthy person in real life. Avatars with high-fidelity human-like appearances that nevertheless evoke creepy feelings are called “uncanny”. Researchers have explored the uncanny valley by conducting experiments on virtual avatars in different areas [8,9], while others still doubt the existence of the uncanny valley [10]. However, it is widely accepted that there is strong demand for high-fidelity human-like virtual avatars in some areas, such as medical training [11] and spatial perception [12] in a virtual reality environment, as people find that user experience improves significantly when interacting with high-fidelity virtual avatars in these applications. With the rapid developments in technologies and theories, it is possible to create human-like virtual avatars with high fidelity that lie above the “uncanny” threshold.

Figure 1. The uncanny valley theory.

Increasing the fidelity of virtual avatars isn’t the only way to avoid the “uncanny”, as reduced fidelity can also help reduce the effect, based on the uncanny valley theory itself. Moreover, high-fidelity may not be the best choice for virtual avatars in some applications, such as those that don’t require fidelity but focus on other aspects to induce a positive user experience. For example, cartoon-style virtual avatars that are less human-like are a popular alternative for children, who may find high-fidelity virtual avatars scary. Such avatars, as seen in Japanese anime and Disney movies, are also popular among adults, who find the cartoon style more appealing. Therefore, high-fidelity virtual avatars are not always the optimal choice, and thus the area is worth exploring.

This paper reviews the most recent literature to discuss the importance of high-fidelity representations for human-like virtual avatars from different aspects and across multidisciplinary fields, in order to provide general advice on character design. We first investigate the effect of appearance on high-fidelity human-like virtual avatars in a broad area. As it has been argued that virtual characters with motion applied can quickly draw attention from viewers and improve user engagement, the effect of character motion, especially facial expression, on high-fidelity virtual avatars becomes the focus of this study. We also discuss the behavioral intelligence of those virtual avatars in different areas to address their current roles in human computer interactions (HCI). In addition, we summarise some practical approaches to improving the fidelity of virtual avatars. Unlike other review papers that only track the factors directly related to virtual avatars, we also discuss the most recent deep-learning-based technologies for system development that are not yet widely used in the development of virtual avatars, but have great potential to be utilised in the future. Ultimately, we provide some general avatar design methods based on the literature explored.

2. The Appearance of High-Fidelity Human-Like Virtual Avatars

Human-like avatars with high fidelity in appearance and performance feature in video games, such as Elden Ring [13], that have attracted attention worldwide. Research on the emotion and fidelity of avatars has developed rapidly in recent years [14-19]. High-fidelity avatars are not only used widely in games but are also permeating various research areas such as medical training [20], education [21], and cultural heritage applications [22,23].

Previous works compared different fidelity levels of human-like virtual avatars in simulated inter-personal experiences. They discovered that participants report less negative effect when interacting with avatars with higher levels of fidelity than with avatars with lower fidelity levels. Also, avatars with a higher fidelity in appearance are more effective in inducing emotional responses [11]. Further, it has been shown that people with social phobia feel less stress while interacting with avatars of high human likeness, but experience more anxiety with virtual avatars of low fidelity, which demonstrates some of the advantages of utilising avatars with a high-fidelity appearance in therapy applications [24]. Moreover, compared to robot-like avatars and zombie avatars, which have few real human features in appearance, research shows that high-fidelity human-like avatars (similar to a real human) have a minor effect on avoidance movement behaviour [25]. This indicates that people tend to avoid interacting with virtual avatars which are less human-like in appearance.

Even though studies show that high-fidelity human-like virtual avatars are primarily positive, when creating their own avatar in social applications, most people prefer a middle-fidelity avatar as their character rather than a high-fidelity virtual avatar that is a one-to-one recreation of themselves [26]. This contradicts studies suggesting that users (who expect to feel more present) prefer high-fidelity avatars embodied with their own appearance [5,27]. Recent case studies indicate that high-fidelity isn’t always the best choice during stage performance [28]. Similarly, experiments report that subjects prefer cartoon-like avatars to high-fidelity human-like avatars, as they find that cartoon-like avatars make it easier to manipulate the facial expression and are more appropriate for representing themselves [29]. As long as the possibility of high-fidelity avatars feeling uncanny exists [5], it is essential to balance the design of avatar appearance between high-fidelity and the “uncanny”.

3. The Effect of Motion on High-Fidelity Human-Like Virtual Avatars

Similar to the appearance, the motion applied to high-fidelity human-like virtual avatars is equally crucial. Research shows that subjects have less motivation for movement while embodied with a static avatar [30]. It is suggested that motion applied to virtual avatars can increase user enjoyment and other feelings during social interactions in virtual reality (VR) [31]. Moreover, no significant difference was found in user enjoyment when interacting with a fully animated human-like virtual avatar in VR compared to a face to face real life interaction. However, the face to face interaction is preferred when only limited motion is applied to these virtual avatars. While most of the studies indicate that movement of the upper body usually draws more attention from people than movement of the other parts of the human body, it is demonstrated that the lack of facial expression can easily cause a high-fidelity avatar to be uncanny [32]. This paper thus reviews studies investigating the effect of motion with focus on the upper body: facial expression; eye gaze; and head motion [33], on high-fidelity human-like virtual avatars.

3.1. Facial Expression

Facial expression, often combined with emotional content, is a crucial feature for non-verbal communication in our daily life. However, some studies reveal that facial expression is not culturally universal from both the categorical and motion perspectives [34]. Recently, increasing research indicates the critical role of facial expression, mainly when applied to human-like virtual avatars, in enhancing motion fidelity and conveying emotions to aid user experience. Some studies support that subjects spend more time observing high-fidelity virtual avatars with facial expression and have stronger empathic connections with these animated avatars than with those that are motionless. At the same time, subjects show maximum self-recognition of avatars’ expressive face animation, even if the animation is not manipulatable by the subjects [35]. Others report that adding emotional facial expression to a human-like virtual avatar significantly improves user engagement in games [36]. For instance, studies find that in a VR volleyball game, user engagement and user experience are significantly higher when observing human-like virtual avatars with emotional facial expression (e.g. angry faces and joyful smiles) than when observing avatars that have no facial expression at all. Also, research has demonstrated that the perception of emotion from virtual avatars is equally accurate for global audiences with different cultural backgrounds when these avatars express facial animation [37], which provides a clue for utilising facial expression to bridge cultural differences when designing character motion for high-fidelity virtual avatars. Although studies show that subjects prefer virtual avatars with cartoon-like appearances to high-fidelity virtual avatars, when the fidelity of appearance is reduced and the fidelity of facial expression is increased, the cartoon-like avatar and the high-fidelity human-like avatar become equally favoured [29].

It has been discovered that human-like virtual avatars may cause an uncanny effect [38]. This is because these human-like avatars are perceived as creepier than other avatars when performing only upper facial animation or only lower facial animation [32]. However, for avatars embodied by users with their own appearance, people find it harder to control the same facial expression on high-fidelity avatars than on cartoon-like avatars, and users who are embodied in a cartoon-like avatar express more facial animation [29]. Thus, due to the non-linear relationship between high-fidelity motion and high-fidelity appearance, it is essential to match the fidelity of facial expression to the fidelity of appearance for virtual avatars.

3.2. Eye Gaze and Head Motion

As current technologies and theories make the investigation of the human body easier to approach, people have started uncovering the value of eye gaze and head motion on high-fidelity human-like virtual avatars. They have successfully made a significant step in increasing the affinity between users and virtual avatars in scenarios via utilising these two motions [39]. Some have found that eye gaze and head motion significantly impact identifying the portrayed personality of a high-fidelity virtual avatar [40]. In contrast, others have discovered that combining facial expression and head motion can make a high-fidelity virtual avatar more appealing [41]. Research has shown that head motion can positively affect high-fidelity virtual avatars, which increases motion fidelity, perceived emotional intensity, and user affinity for those avatars [42]. However, on the other side, the effect of motion is only sometimes positive. It is reported that applying eye gaze animation to virtual avatars with high fidelity human features can improve the quality of communication during interactions, while adding eye gaze animation (to low-fidelity virtual avatars) only makes the avatars creepier without having any improvements on the quality of communication [43].

When addressing the importance of upper body motion on high-fidelity human-like virtual avatars, most research focuses on improving the efficiency of machines, algorithms, and systems to generate high-fidelity movements. Only a few investigate the theory behind it, which can be presented as a question: “What, why, and how can this motion influence the perception of high-fidelity virtual avatars”. It is especially noticed that only a limited number of works are related to the effect of head motion when compared to the studies of facial expression and eye gaze on virtual avatars. Therefore, it is worth exploring the possibility of head motion in these areas.

4. The Intelligence of High-Fidelity Human-Like Virtual Avatars

Artificial intelligence (AI) technology, which makes virtual avatars behave in a more human-like way, especially through the ability to react like a real human during interactions, has fast become the main focus in HCI in recent years. AI agents, which can act like real humans to achieve a higher level of user engagement, are used not only in games [44] but also in other applications such as education, training [45], and health care [46]. As a result, the intelligence of virtual avatars is also becoming an important part of character design.

The effect of intelligence on virtual avatars is two-sided and has been debated over time. Some people support and encourage the development of intelligence for virtual avatars, as this can benefit humans in many areas, especially when using big data and machine learning [47] to achieve intelligence in health care [48], making these virtual avatars react like a real human to meet user needs for interaction. For instance, some research suggests that using behavior intelligence, such as enhancing the emotional expressions of virtual avatars, in current applications is the key to achieving realistic human-computer interactions [49]. These studies can help display the virtual avatars’ intelligence and make them more human-like, and thus improve user experience. However, others vehemently disagree with this opinion and suspect that virtual avatars with high-fidelity artificial intelligence may threaten humans [50] and result in ethical issues in some situations. Therefore, those avatars are designed to stay less intelligent. Studies in [51] indicate that in some cases, virtual avatars with imprecise behaviours are perceived as more human-like than those with precise behaviours, which suggests that, in a different way, less intelligence may be better for virtual avatars. As the debate on high-fidelity intelligence for virtual avatars is complex and involves moral questions, it is hard to draw a conclusion. The rest of the paper thus focuses on addressing high-fidelity approaches to the appearance and the motion of virtual avatars rather than considering the intelligence of virtual avatars.

5. Approaches to “High-Fidelity”

Technologies for creating high-fidelity human-like virtual avatars, such as robust algorithm investigation [52] on improving the quality of 3D modelling and motion tracking systems, are widely explored. The outcomes of those investigations are used for supporting and technically achieving high fidelity. This section discusses current approaches to improving the appearance and motion fidelity of virtual avatars to provide advice for designing human-like virtual avatars in different applications.

5.1. Technical Approaches

Technical approaches for supporting high-fidelity virtual avatars are numerous, while deep learning [53,54] is one of the most popular methods in extensive areas, as it allows machines to get “trained” from a large amount of data and expectably make the machines work like a human.

Some focus on improving the appearance of those virtual avatars to a real human level by exploring realistic scanning and reconstruction applications that duplicate realistic human appearance, shape, and skin textures [55] to generate high-fidelity human-like virtual avatars. As the most complex part of the human body, the human face draws the most attention in those robust scanning and reconstruction tasks. Besides facial capture systems [17], current human face recognition [56] and tracking systems are popular, as these novel achievements make tracking the appearance of a human face possible and accurate via a web camera, even with movements on the face and from multiple angles, which greatly improves the capture process.

Other studies explore methods for applying high-fidelity motion to those virtual avatars to make them seemingly move like actual humans. Motion recognition [57], motion tracking [58] and motion capture systems [59] play essential roles in the development of those techniques. Even though most tracking and recognition techniques do not directly transfer motion tracked from real humans to virtual avatars, those tracking techniques are still qualified resources for providing reference for character motion design and can potentially fill the gap in current motion capture systems. This is because the existing motion capture systems are very expensive and require large spaces for installation, while most tracking systems only need a web camera. Also, some have bridged the gap by developing human facial expression synthesis systems [60] and facial expression reconstruction systems [61] via existing facial tracking systems, which opens the path for transferring the motion from a tracking system directly to a corresponding synthesis system. For instance, if a system wants to synthesise happy facial expressions for a virtual avatar, it can directly reconstruct the intended facial expression for that avatar by using the motion [62], which makes the capture of motion possible without using cameras, albeit only with a specialist head mounted display (HMD) that can detect one’s brain signals. This can make capturing one’s facial expression possible while wearing an HMD to avoid the constraints of traditional tracking systems. This is because traditional capture systems require cameras when wearing an HMD, but cameras can’t capture the upper facial expression (e.g. forehead and eyebrows), which results in a loss of motion tracking of the face.

However, the limitations of current tracking systems cannot be ignored. Most captured motion from web camera-based tracking systems can’t be used on virtual avatars directly, as motion retargeting is required based on the different shapes of avatars. Current deep learning approaches, such as training deep learning networks to retarget one single input motion on multiple different face models simultaneously, have made the process of motion retargeting easier; however, this retargeting only works for trained models. For instance, it takes a lot of effort (e.g. time) to train a new model via this particular deep learning network for motion retargeting [63]. Also, adjusting motion to fit a specific avatar personality can be very time-consuming if no actors can perform suitable motion to be captured by the system, especially during a situation like the COVID-19 pandemic. Further, this capture becomes inaccurate when head motion is involved, as these systems often ignore the movement of the head. For instance, if a person rotates their head during the capture process, these capture systems may fail to track the motion as the original position of the face is changed. For motion tracking via EEG signals, specific sensors and avatar models are required. Thus, it can’t be used in many applications. Therefore, those capture systems can be improved in the future, for example by increasing the accuracy when the head rotates and by keeping motion consistent when applied to different models.
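The head-rotation problem described above can be illustrated with a rigid alignment step. The following is a minimal sketch, not a method taken from the reviewed works: it assumes 2D facial landmarks in corresponding order and only handles translation and in-plane rotation (head roll). The function name `remove_head_roll` and the landmark layout are hypothetical. It computes the least-squares rotation that maps the captured landmarks onto a neutral reference, so that what remains after alignment is closer to pure facial deformation.

```python
import math

def remove_head_roll(captured, reference):
    """Rigidly align 2D landmarks to a reference (translation + in-plane rotation).

    Both inputs are equal-length lists of (x, y) tuples in corresponding order.
    Returns the aligned landmarks and the rotation angle (radians) that was applied.
    """
    n = len(captured)
    # Centroids of both landmark sets.
    cap_cx = sum(x for x, _ in captured) / n
    cap_cy = sum(y for _, y in captured) / n
    ref_cx = sum(x for x, _ in reference) / n
    ref_cy = sum(y for _, y in reference) / n
    # Centre both sets so that only rotation remains to be solved.
    p = [(x - cap_cx, y - cap_cy) for x, y in captured]
    q = [(x - ref_cx, y - ref_cy) for x, y in reference]
    # Closed-form least-squares rotation angle in 2D.
    dot = sum(px * qx + py * qy for (px, py), (qx, qy) in zip(p, q))
    cross = sum(px * qy - py * qx for (px, py), (qx, qy) in zip(p, q))
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Rotate the centred capture and move it onto the reference centroid.
    aligned = [(c * px - s * py + ref_cx, s * px + c * py + ref_cy) for px, py in p]
    return aligned, theta
```

Real capture systems would need a 3D version of this step (handling yaw and pitch as well) and a way to separate expression from pose more robustly; the sketch only shows why compensating for head pose before reading facial motion helps.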

Currently, applications have made tracking systems intelligent enough to track not only the movement of humans but also the emotional clues from detected facial expression and body gestures [64], which can be used as a reliable reference for guiding high-fidelity motion design. Moreover, the development of existing algorithms for certain systems, such as motion planning and autonomous vehicles [65] and healthcare systems [66], which utilise deep learning techniques to enhance the intelligence of those systems, has been extensively explored in different areas [67-69]. Even though these investigations do not directly improve the design of high-fidelity virtual avatars, the presented methods have merit as they cover a wide range of focus points, such as improving processing speed or enhancing detection accuracy [70-72], which can be combined with existing capture and reconstruction systems for achieving high-fidelity virtual avatars.

5.2. Hypothesis-Based Experiments

Modern virtual environments such as virtual reality [73], changing character features [74], and adjusting rendering styles to match the portrayed personality [3] are common ways to achieve high fidelity in the appearance of human-like virtual avatars, which can make avatars more appealing.

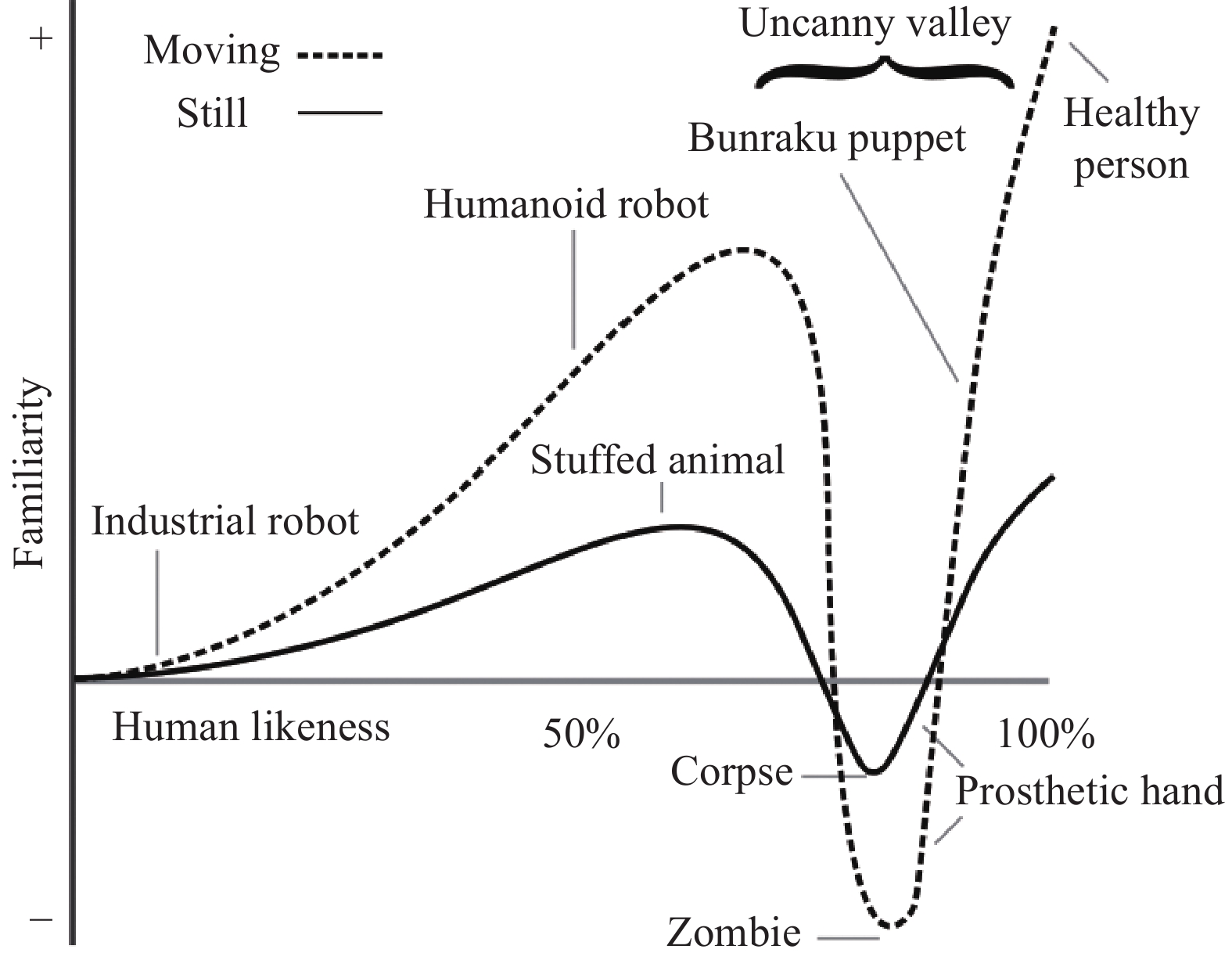

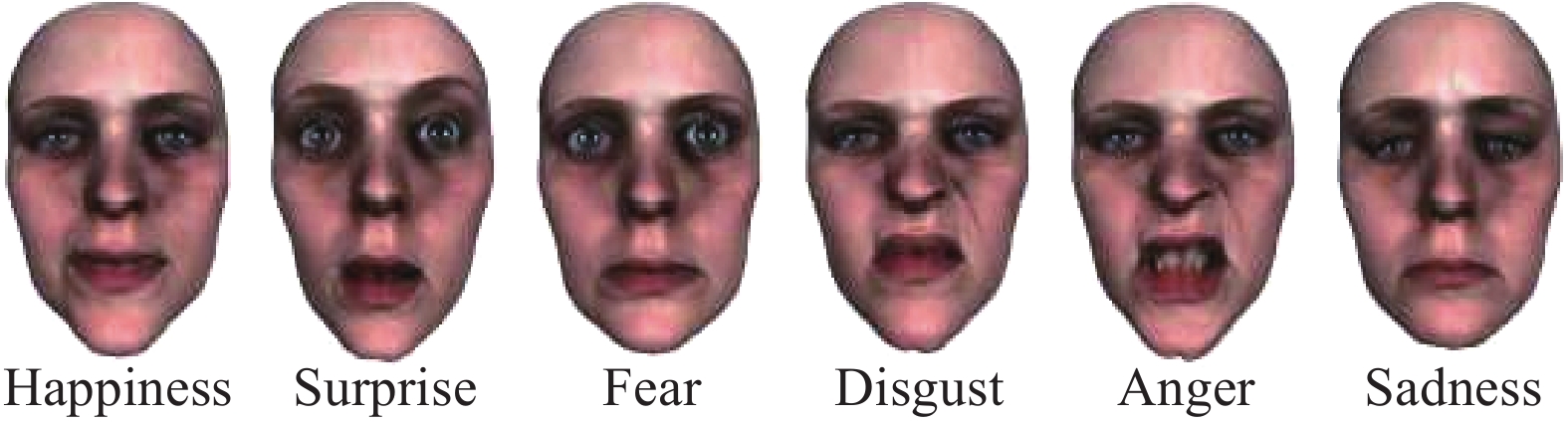

When considering how to improve the effectiveness of character motion, studies indicate that high-fidelity facial expression can be driven by perception from individuals (Figure 2) [75]. One suggestion for improving the effectiveness of eye gaze animation is to direct the eye gaze towards users rather than elsewhere during speaking interactions [76]. Further, head motion can also improve the fidelity of a virtual avatar and increase the affinity between the user and the avatar in specific emotional situations (Figure 3) [42].

Figure 2. High-fidelity facial expressions in different emotional situations are synthesised based on subjects’ ratings.

Figure 3. Head motion increases the motion fidelity, perceived emotional intensity, and user affinity of virtual avatars in happy emotional situations in virtual reality (VR).

According to recent literature, the expression of emotion from virtual avatars has become possible and easier with motion applied. It has been confirmed that emotional content can make virtual avatars more interactive and seemingly even more human-like by increasing the motion fidelity to empathise with the viewers [77]. Such emotional expression is not limited to motion: it has also been discovered that the lighting system can influence the perceived emotional intensity of a virtual avatar, with brighter lighting causing the avatar to look happier [78]. The above methods provide clues for improving motion and appearance fidelity for avatars approaching high fidelity.

On the other hand, research suggests that motion fidelity should match the appearance fidelity of a virtual avatar to meet the subject’s expectation [43,79], which means that the higher the appearance fidelity of a human-like virtual avatar, the higher its motion fidelity should be to avoid the uncanny valley. Also, some studies show that the acceptance of high-fidelity avatars as friends, colleagues, and assistants is significantly higher than the acceptance of these avatars in professional roles such as judges or artists [80]. Therefore, it is a good choice for avatar design to combine visual fidelity, motion fidelity, and the specific situation, such as the role that the avatar would play while interacting.
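The matching rule above can be written down as a simple design check. This is only an illustrative sketch: the three ordinal levels, the `Fidelity` enum, and the function `review_design` are hypothetical and are not taken from the cited studies, which do not define a numeric scale.

```python
from enum import IntEnum

class Fidelity(IntEnum):
    """Hypothetical ordinal fidelity levels, used only for illustration."""
    LOW = 1
    MIDDLE = 2
    HIGH = 3

def review_design(appearance: Fidelity, motion: Fidelity) -> str:
    """Flag a mismatch between appearance fidelity and motion fidelity."""
    gap = int(motion) - int(appearance)
    if gap == 0:
        return "matched"
    if gap < 0:
        # e.g. a photo-realistic face with little or no facial motion
        return "motion lags appearance"
    # e.g. realistic eye gaze animation on a low-fidelity avatar
    return "motion exceeds appearance"
```

Either kind of mismatch is treated as a warning here, since the reviewed studies report negative effects both for realistic avatars lacking motion and for realistic motion on low-fidelity avatars.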

Besides the experiment-based investigations mentioned above, qualitative data, such as the analysis of individuals’ opinions from case studies and interviews, is also an effective resource for finding clues for creating high-fidelity human-like virtual avatars [26,81]. For example, as mentioned before, a middle-level fidelity human-like virtual avatar is sometimes preferred when users create their own avatars to represent themselves when interacting with others. Some studies also indicate that high fidelity isn’t always the best choice in live performance cases [28].

5.3. General Advice

The existence of high-fidelity virtual avatars is meaningful. However, designing appropriate levels of fidelity for these avatars is still challenging. The uncanny is the most difficult challenge, as high-fidelity human-like virtual avatars can easily fall into it. Causes include a mismatch between the appearance and motion fidelity of the avatar, a lack of motion on the face, and so on. These make high-fidelity human-like virtual avatars sometimes less effective than other virtual avatars with less fidelity (e.g. cartoon-style virtual avatars). Thus, two general pieces of advice are offered for designing high-fidelity virtual avatars based on the studies discussed.

For self-embodied virtual avatars: Allowing customised features to users while creating their own virtual avatars is a better choice than just applying a photo-realistic high-fidelity scan of users to build the avatar. This finding supports studies [82] which indicate that people tend to have virtual avatars that are different from their own bodies in a virtual world. Also, a “middle-level” fidelity (e.g. customised eye shape) on appearance is recommended in this situation.

For interactive virtual avatars: Utilising the advantage of high-fidelity motion can aid the character’s appearance as well as increase the affinity between users and avatars in some situations. This finding is similar to studies supporting that the combination of motion fidelity and graphical fidelity considerably benefits the design of interactive virtual avatars [83]. One suggestion is to combine motion fidelity, especially facial motion, appropriately with appearance fidelity. For instance, portraying subtle details, such as applying positive emotion (e.g. always smiling) to avatars with happy personalities, may achieve a balanced design.

Besides the two general pieces of advice on character design, a list of applications that can use high-fidelity virtual avatars and those that should avoid them has been compiled from the literature in Table 1 below:

Table 1. Fidelity recommendation of virtual avatars in a list of applications.

| Type of applications | Fidelity Level | Explanation |
| --- | --- | --- |
| Medical training; self-therapy; education | High-fidelity human-like virtual avatars | High-fidelity human-like virtual avatars increase user engagement in these applications. |
| Immersive video games | “Middle-level” fidelity | Allowing customised features to users as a player can increase user experience. |
| Live performance | No fidelity level required | No difference has been found between a wooden model and high-fidelity virtual avatars during live performances. |
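For readers who want to use Table 1 in a design tool, the recommendations can be encoded as a small lookup. This is a sketch only: the dictionary keys mirror the table rows, while the short level labels and the function name `recommend_fidelity` are hypothetical conveniences rather than part of the reviewed literature.

```python
from typing import Optional

# Rows of Table 1, keyed by application type (lower-case).
RECOMMENDED_FIDELITY = {
    "medical training": "high",
    "self-therapy": "high",
    "education": "high",
    "immersive video games": "middle",
    "live performance": "none required",
}

def recommend_fidelity(application: str) -> Optional[str]:
    """Return the fidelity level from Table 1, or None if the application is not listed."""
    return RECOMMENDED_FIDELITY.get(application.strip().lower())
```

Applications that are not listed return `None` rather than a guessed default, since the table only covers the cases supported by the reviewed studies.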

In addition, high-fidelity human-like virtual avatars are more effective in increasing user engagement and user experience in specific character roles such as doctors, lawyers, and professors. Applications containing these character roles should consider high-fidelity human-like virtual avatars rather than cartoon-like virtual avatars.

6. Conclusion

This paper has reviewed recent works investigating the importance of high-fidelity human-like virtual avatars in avatar appearance, motion, and intelligence. It has been discovered that both appearance and motion are crucial to avatars. Also, the current technical approaches, especially via deep learning, have been discussed to improve the fidelity of virtual avatars. In conclusion, combining appearance and motion fidelity is a good choice for achieving a balanced avatar design from the approaches discussed. The combination of existing systems and algorithms may direct the design for those avatars to a different level. As the effect of high-fidelity virtual avatars depends on specific situations, the methods for avoiding the uncanny valley should be addressed accordingly.

Conflicts of Interest: The authors declare no conflict of interest.

Author Contributions: Qiongdan Cao: investigation, resources, writing–original draft and writing–review & editing; Hui Yu: Supervision and writing–review & editing; Paul Charisse: supervision; Si Qiao: supervision; Brett Stevens: supervision. All authors have read and agreed to the published version of the manuscript.

Funding: This research received no external funding.

References

- Autodesk. Arnoldrenderer Research. Available online: https://arnoldrenderer.com/research/ (accessed on 10 December 2022).

- Park, S.W.; Park, H.S.; Kim, J. H.; et al. 3D displacement measurement model for health monitoring of structures using a motion capture system. Measurement, 2015, 59: 352−362.

- Zibrek, K.; Kokkinara, E.; McDonnell, R. The effect of realistic appearance of virtual characters in immersive environments-does the character's personality play a role? IEEE Trans. Vis. Comput. Graph., 2018, 24: 1681−1690.

- Jo, D.; Kim, K.H.; Kim, G.J. Effects of avatar and background representation forms to co-presence in mixed reality (MR) tele-conference systems. In SIGGRAPH ASIA 2016 Virtual Reality Meets Physical Reality: Modelling and Simulating Virtual Humans and Environments, Macau, China, 5–8 December 2016; ACM: Macau, China, 2016; pp. 12. doi:10.1145/2992138.2992146

- Latoschik, M.E.; Roth, D.; Gall, D.; et al. The effect of avatar realism in immersive social virtual realities. In Proceedings of the 23rd ACM Symposium on Virtual Reality Software and Technology, Gothenburg, Sweden, 8–10 November 2017; ACM: Gothenburg, 2017; pp. 39. doi:10.1145/3139131.3139156

- Garau, M. The Impact of Avatar Fidelity on Social Interaction in Virtual Environments. Ph.D. Thesis, University College London, London, UK, 2003.

- Mori, M.; MacDorman, K.F.; Kageki, N. The uncanny valley [from the field]. IEEE Robot. Autom. Mag., 2012, 19: 98−100.

- Hanson, D.; Olney, A.; Prilliman, S.; et al. Upending the uncanny valley. In

Proceedings of the 20th National Conference on Artificial Intelligence ,Pittsburgh ,Pennsylvania ,USA ,9 –13 July 2005 ; AAAI Press: Pittsburgh, 2005; pp. 1728–1729. - Geller, T. Overcoming the uncanny valley. IEEE Comput. Graph. Appl., 2008, 28: 11−17.

- Brenton, H.; Gillies, M.; Ballin, D.; et al. The uncanny valley: Does it exist. In Proceedings of the 11th Conference of Human Computer Interaction, Workshop on Human Animated Character Interaction, Las Vegas, NV, USA; Lawrence Erlbaum Associates: Las Vegas, 2005.

- Volante, M.; Babu, S.V.; Chaturvedi, H.; et al. Effects of virtual human appearance fidelity on emotion contagion in affective inter-personal simulations. IEEE Trans. Vis. Comput. Graph., 2016, 22: 1326−1335.

- Ries, B.; Interrante, V.; Kaeding, M.; et al. Analyzing the effect of a virtual avatar's geometric and motion fidelity on ego-centric spatial perception in immersive virtual environments. In Proceedings of the 16th ACM Symposium on Virtual Reality Software and Technology, Kyoto, Japan, 18–20 November 2009; ACM: Kyoto, 2009; pp. 59–66. doi:10.1145/1643928.1643943

- BANDAI NAMCO. Elden Ring. FromSoftware, Inc. 2022. Available online: https://en.bandainamcoent.eu/elden-ring/elden-ring (accessed on 4 July 2022).

- Cao, C.; Agrawal, V.; De La Torre, F.; et al. Real-time 3D neural facial animation from binocular video. ACM Trans. Graph., 2021, 40: 87.

- Yu, H.; Liu, H.H. Regression-based facial expression optimization. IEEE Trans. Hum. Mach. Syst., 2014, 44: 386−394.

- Wang, L.Z.; Chen, Z.Y.; Yu, T.; et al. FaceVerse: A fine-grained and detail-controllable 3D face morphable model from a hybrid dataset. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; IEEE: New Orleans, 2022; pp. 20301–20310. doi:10.1109/CVPR52688.2022.01969

- Zhang, S.; Yu, H.; Wang, T.; et al. Linearly augmented real-time 4D expressional face capture. Inf. Sci., 2021, 545: 331−343.

- Zall, R.; Kangavari, M.R. Comparative analytical survey on cognitive agents with emotional intelligence. Cognit. Comput., 2022, 14: 1223−1246.

- D’Avella, S.; Camacho-Gonzalez, G.; Tripicchio, P. On Multi-Agent Cognitive Cooperation: Can virtual agents behave like humans? Neurocomputing, 2022, 480: 27−38.

- Thompson, J.; White, S.; Chapman, S. Interactive clinical avatar use in pharmacist preregistration training: Design and review. J. Med. Internet Res., 2020, 22: e17146.

- Sinatra, A.M.; Pollard, K.A.; Files, B.T.; et al. Social fidelity in virtual agents: Impacts on presence and learning. Comput. Hum. Behav., 2021, 114: 106562.

- Machidon, O.M.; Duguleana, M.; Carrozzino, M. Virtual humans in cultural heritage ICT applications: A review. J. Cult. Herit., 2018, 33: 249−260.

- Foni, A.E.; Papagiannakis, G.; Magnenat-Thalmann, N. Virtual Hagia Sophia: Restitution, visualization and virtual life simulation. In UNESCO World Heritage Congress (Vol. 2); 2002.

- Stefanova, M.; Pillan, M.; Gallace, A. Influence of realistic virtual environments and humanlike avatars on patients with social phobia. In International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, 17–19 August 2021; ASME, 2021; pp. V002T02A081. doi:10.1115/DETC2021-70265

- Mousas, C.; Koilias, A.; Rekabdar, B.; et al. Toward understanding the effects of virtual character appearance on avoidance movement behavior. In 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March 2021–1 April 2021; IEEE: Lisboa, 2021; pp. 40–49. doi:10.1109/VR50410.2021.00024

- Hube, N.; Angerbauer, K.; Pohlandt, D.; et al. VR collaboration in large companies: An interview study on the role of avatars. In 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 4–8 October 2021; IEEE: Bari, 2021; pp. 139–144. doi:10.1109/ISMAR-Adjunct54149.2021.00037

- Waltemate, T.; Gall, D.; Roth, D.; et al. The impact of avatar personalization and immersion on virtual body ownership, presence, and emotional response. IEEE Trans. Vis. Comput. Graph., 2018, 24: 1643−1652.

- Gochfeld, D.; Benzing, K.; Laibson, K.; et al. Avatar selection for live performance in virtual reality: A case study. In 2019 IEEE Games, Entertainment, Media Conference (GEM), New Haven, CT, USA, 18–21 June 2019; IEEE: New Haven, 2019; pp. 1–5. doi:10.1109/GEM.2019.8811548

- Ma, F.; Pan, X.N. Visual fidelity effects on expressive self-avatar in virtual reality: First impressions matter. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; IEEE: Christchurch, 2022; pp. 57–65. doi:10.1109/VR51125.2022.00023

- Herrera, F.; Oh, S.Y.; Bailenson, J.N. Effect of behavioral realism on social interactions inside collaborative virtual environments. Presence: Teleoperat. Virtual Environ., 2018, 27: 163−182.

- Rogers, S.L.; Broadbent, R.; Brown, J.; et al. Realistic motion avatars are the future for social interaction in virtual reality. Front. Virtual Real., 2022, 2: 750729.

- Tinwell, A.; Grimshaw, M.; Nabi, D.A.; et al. Facial expression of emotion and perception of the Uncanny Valley in virtual characters. Comput. Hum. Behav., 2011, 27: 741−749.

- McDonnell, R.; Larkin, M.; Hernández, B.; et al. Eye-catching crowds: Saliency based selective variation. ACM Trans. Graph., 2009, 28: 55.

- Jack, R.E.; Garrod, O.G.B.; Yu, H.; et al. Facial expressions of emotion are not culturally universal. Proc. Natl. Acad. Sci. USA, 2012, 109: 7241−7244.

- Gonzalez-Franco, M.; Steed, A.; Hoogendyk, S.; et al. Using facial animation to increase the enfacement illusion and avatar self-identification. IEEE Trans. Vis. Comput. Graph., 2020, 26: 2023−2029.

- Bai, Z.C.; Yao, N.M.; Mishra, N.; et al. Enhancing emotional experience by building emotional virtual characters in VR volleyball games. Comput. Animat. Virtual Worlds, 2021, 32: e2008.

- Yun, C.; Deng, Z.G.; Hiscock, M. Can local avatars satisfy a global audience? A case study of high-fidelity 3D facial avatar animation in subject identification and emotion perception by US and international groups. Comput. Entertain., 2009, 7: 21.

- Hodgins, J.; Jörg, S.; O'Sullivan, C.; et al. The saliency of anomalies in animated human characters. ACM Trans. Appl. Percept., 2010, 7: 22.

- Ruhland, K.; Zibrek, K.; McDonnell, R. Perception of personality through eye gaze of realistic and cartoon models. In Proceedings of the ACM SIGGRAPH Symposium on Applied Perception, Tübingen, Germany, 13–14 September 2015; ACM: Tübingen, 2015; pp. 19–23. doi:10.1145/2804408.2804424

- Ruhland, K.; Peters, C.E.; Andrist, S.; et al. A review of eye gaze in virtual agents, social robotics and HCI: Behaviour generation, user interaction and perception. Comput. Graph. Forum, 2015, 34: 299−326.

- Kokkinara, E.; McDonnell, R. Animation realism affects perceived character appeal of a self-virtual face. In Proceedings of the 8th ACM SIGGRAPH Conference on Motion in Games, Paris, France, 16–18 November 2015; ACM: Paris, 2015; pp. 221–226. doi:10.1145/2822013.2822035

- Cao, Q.D.; Yu, H.; Nduka, C. Perception of head motion effect on emotional facial expression in virtual reality. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; IEEE: Atlanta, 2020; pp. 750–751. doi:10.1109/VRW50115.2020.00226

- Garau, M.; Slater, M.; Vinayagamoorthy, V.; et al. The impact of avatar realism and eye gaze control on perceived quality of communication in a shared immersive virtual environment. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Ft. Lauderdale, FL, USA, 5–10 April 2003; ACM: Ft. Lauderdale, 2003; pp. 529–536. doi:10.1145/642611.642703

- Petrović, V.M. Artificial intelligence and virtual worlds-toward human-level AI agents. IEEE Access, 2018, 6: 39976−39988.

- Norouzi, N.; Kim, K.; Hochreiter, J.; et al. A systematic survey of 15 years of user studies published in the intelligent virtual agents conference. In Proceedings of the 18th International Conference on Intelligent Virtual Agents, Sydney, Australia, 5–8 November 2018; ACM: Sydney, 2018; pp. 17–22. doi:10.1145/3267851.3267901

- Luerssen, M.H.; Hawke, T. Virtual agents as a service: Applications in healthcare. In Proceedings of the 18th International Conference on Intelligent Virtual Agents, Sydney, Australia, 5–8 November 2018; ACM: Sydney, 2018; pp. 107–112. doi:10.1145/3267851.3267858

- Alicja, K.; Maciej, M. Can AI see bias in X-ray images. Int. J. Network Dyn. Intell., 2022, 1: 48−64.

- Jiang, F.; Jiang, Y.; Zhi, H.; et al. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol., 2017, 2: 230−243.

- Zhao, G.Y.; Li, Y.T.; Xu, Q.R. From emotion AI to cognitive AI. Int. J. Network Dyn. Intell., 2022, 1: 65−72.

- Bosse, T.; Hartmann, T.; Blankendaal, R.A.M.; et al. Virtually bad: A study on virtual agents that physically threaten human beings. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; International Foundation for Autonomous Agents and Multiagent Systems: Stockholm, 2018; pp. 1258–1266.

- Schmidt, S.; Zimmermann, S.; Mason, C.; et al. Simulating human imprecision in temporal statements of intelligent virtual agents. In CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April 2022–5 May 2022; ACM: New Orleans, 2022; pp. 422. doi:10.1145/3491102.3517625

- Aneja, D.; McDuff, D.; Shah, S. A high-fidelity open embodied avatar with lip syncing and expression capabilities. In 2019 International Conference on Multimodal Interaction, Suzhou, China, 14–18 October 2019; ACM: Suzhou, 2019; pp. 69–73. doi:10.1145/3340555.3353744

- Niu, Z.Y.; Zhong, G.Q.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing, 2021, 452: 48−62.

- Liu, S.M.; Xia, Y.F.; Shi, Z.S.; et al. Deep learning in sheet metal bending with a novel theory-guided deep neural network. IEEE/CAA J. Autom. Sin., 2021, 8: 565−581.

- Vaitonytė, J.; Blomsma, P.A.; Alimardani, M.; et al. Realism of the face lies in skin and eyes: Evidence from virtual and human agents. Comput. Hum. Behav. Rep., 2021, 3: 100065.

- Lu, P.; Song, B.Y.; Xu, L. Human face recognition based on convolutional neural network and augmented dataset. Syst. Sci. Control Eng., 2021, 9: 29−37.

- Xia, Y.F.; Yu, H.; Wang, X.; et al. Relation-aware facial expression recognition. IEEE Trans. Cognit. Dev. Syst., 2022, 14: 1143−1154.

- Ahmed, I.; Din, S.; Jeon, G.; et al. Towards collaborative robotics in top view surveillance: A framework for multiple object tracking by detection using deep learning. IEEE/CAA J. Autom. Sin., 2021, 8: 1253−1270.

- Movella Inc. Motion Capture. Available online: https://www.xsens.com/motion-capture (accessed on 10 2022).

- Xia, Y.F.; Zheng, W.B.; Wang, Y.M.; et al. Local and global perception generative adversarial network for facial expression synthesis. IEEE Trans. Circuits Syst. Video Technol., 2022, 32: 1443−1452.

- Lou, J.W.; Wang, Y.M.; Nduka, C.; et al. Realistic facial expression reconstruction for VR HMD users. IEEE Trans. Multimedia, 2020, 22: 730−743.

- Laustsen, M.; Andersen, M.; Xue, R.; et al. Tracking of rigid head motion during MRI using an EEG system. Magn. Reson. Med., 2022, 88: 986−1001.

- Zhang, J.Y.; Chen, K.Y.; Zheng, J.M. Facial expression retargeting from human to avatar made easy. IEEE Trans. Vis. Comput. Graph., 2022, 28: 1274−1287.

- Zhang, X.G.; Yang, X.X.; Zhang, W.G.; et al. Crowd emotion evaluation based on fuzzy inference of arousal and valence. Neurocomputing, 2021, 445: 194−205.

- Ye, F.; Zhang, S.; Wang, P.; et al. A survey of deep reinforcement learning algorithms for motion planning and control of autonomous vehicles. In 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; IEEE: Nagoya, 2021; pp. 1073–1080. doi:10.1109/IV48863.2021.9575880

- Yue, W.B.; Wang, Z.D.; Zhang, J.Y.; et al. An overview of recommendation techniques and their applications in healthcare. IEEE/CAA J. Autom. Sin., 2021, 8: 701−717.

- He, X.; Pan, Q.K.; Gao, L.; et al. A greedy cooperative co-evolutionary algorithm with problem-specific knowledge for multi-objective flowshop group scheduling problems. IEEE Trans. Evol. Comput., 2021, in press. doi:10.1109/TEVC.2021.3115795

- Liu, W.B.; Wang, Z.D.; Zeng, N.Y.; et al. A novel randomised particle swarm optimizer. Int. J. Mach. Learn. Cybern., 2021, 12: 529−540.

- Zhang, K.; Su, Y.K.; Guo, X.W.; et al. MU-GAN: Facial attribute editing based on multi-attention mechanism. IEEE/CAA J. Autom. Sin., 2021, 8: 1614−1626.

- Wang, Y.M.; Dong, X.H.; Li, G.F.; et al. Cascade regression-based face frontalization for dynamic facial expression analysis. Cognit. Comput., 2022, 14: 1571−1584.

- Gou, C.; Zhou, Y.C.; Xiao, Y.; et al. Cascade learning for driver facial monitoring. IEEE Trans. Intell. Veh., 2023, 8: 404−412.

- Sharma, S.; Kumar, V. Voxel-based 3D face reconstruction and its application to face recognition using sequential deep learning. Multimed. Tools Appl., 2020, 79: 17303−17330.

- Sherman, W.R.; Craig, A.B. Understanding Virtual Reality: Interface, Application, and Design; Morgan Kaufmann: Boston, USA, 2003.

- Zell, E.; Aliaga, C.; Jarabo, A.; et al. To stylize or not to stylize?: The effect of shape and material stylization on the perception of computer-generated faces. ACM Trans. Graph., 2015, 34: 184.

- Yu, H.; Garrod, O.G.B.; Schyns, P.G. Perception-driven facial expression synthesis. Comput. Graph., 2012, 36: 152−162.

- Andrist, S.; Pejsa, T.; Mutlu, B.; et al. Designing effective gaze mechanisms for virtual agents. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; ACM: Austin, 2012; pp. 705–714. doi:10.1145/2207676.2207777

- Bopp, J.A.; Müller, L.J.; Aeschbach, L.F.; et al. Exploring emotional attachment to game characters. In Proceedings of the Annual Symposium on Computer-Human Interaction in Play, Barcelona, Spain, 22–25 October 2019; ACM: Barcelona, 2019; pp. 313–324. doi:10.1145/3311350.3347169

- Wisessing, P.; Zibrek, K.; Cunningham, D.W.; et al. Enlighten me: Importance of brightness and shadow for character emotion and appeal. ACM Trans. Graph., 2020, 39: 19.

- Bailenson, J.N.; Beall, A.C.; Blascovich, J. Gaze and task performance in shared virtual environments. J. Visual. Comput. Anim., 2002, 13: 313−320.

- Sharma, M.; Vemuri, K. Accepting human-like avatars in social and professional roles. ACM Trans. Hum. Robot Interact., 2022, 11: 28.

- Freeman, G.; Zamanifard, S.; Maloney, D.; et al. My body, my avatar: How people perceive their avatars in social virtual reality. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; ACM: Honolulu, 2020; pp. 1–8. doi:10.1145/3334480.3382923

- Ducheneaut, N.; Wen, M.H.; Yee, N.; et al. Body and mind: A study of avatar personalization in three virtual worlds. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; ACM: Boston, 2009; pp. 1151–1160. doi:10.1145/1518701.1518877

- Pan, X.N.; Hamilton, A.F.D.C. Why and how to use virtual reality to study human social interaction: The challenges of exploring a new research landscape. Br. J. Psychol., 2018, 109: 395−417.